Frequency Penalty

What is Frequency Penalty?

Frequency Penalty is a setting that ranges from -2.0 to 2.0. It penalizes a token in proportion to how many times that token has already appeared in the text so far.

- Positive values: Decrease the likelihood of repeating the same line verbatim by penalizing tokens that have already been used often.

- Negative values: Increase the likelihood of repetition.

To reduce repetition slightly, values between 0.1 and 1 are typical. Values closer to 2 suppress repetition much more strongly but can noticeably lower the quality of the output.
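The effect of the setting can be illustrated with a minimal sketch. It assumes the commonly documented scheme in which the penalty, multiplied by the number of times a token has already appeared, is subtracted from that token's score (logit) before the next token is chosen. The function names, token scores, and history below are hypothetical and are not taken from the plugin or from any model's internals.

```python
import math
from collections import Counter


def apply_frequency_penalty(logits, tokens_so_far, frequency_penalty):
    """Subtract frequency_penalty * count from each token's logit (assumed scheme)."""
    counts = Counter(tokens_so_far)  # missing tokens count as 0
    return {tok: logit - frequency_penalty * counts[tok] for tok, logit in logits.items()}


def softmax(logits):
    """Turn logits into probabilities that sum to 1."""
    peak = max(logits.values())
    exps = {tok: math.exp(value - peak) for tok, value in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


# Hypothetical scores for three candidate next tokens.
logits = {"cat": 2.0, "dog": 2.0, "bird": 1.0}
# Hypothetical text so far: "cat" has been used three times, "dog" once.
tokens_so_far = ["cat", "cat", "cat", "dog"]

for penalty in (0.0, 0.5, 2.0, -1.0):
    probs = softmax(apply_frequency_penalty(logits, tokens_so_far, penalty))
    print(penalty, {tok: round(p, 3) for tok, p in probs.items()})
```

With a positive penalty the probability of "cat" drops the most because it has been used the most; with a negative penalty it rises instead.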

Adjusting the Frequency Penalty

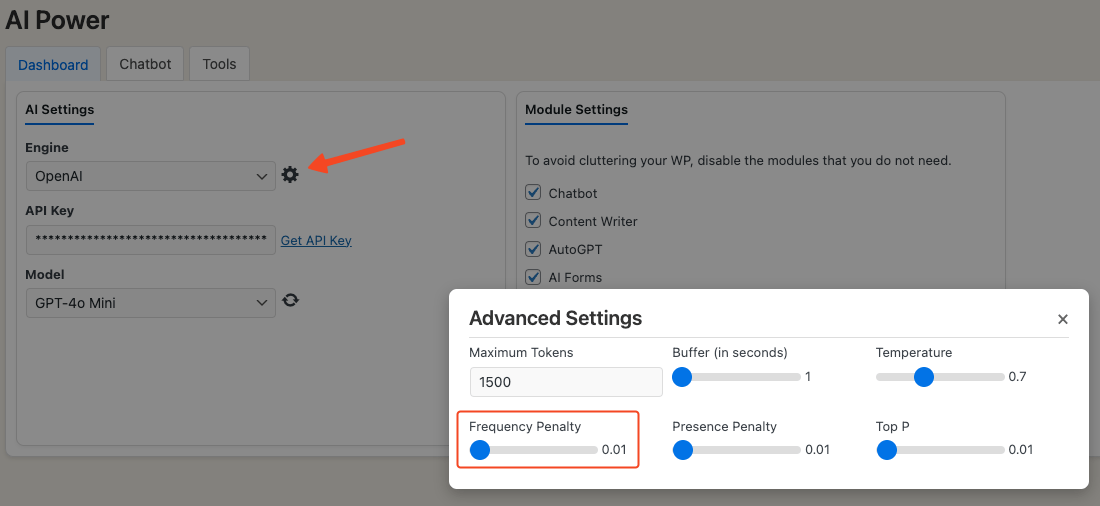

The default Frequency Penalty is 0.01. You can change it in the AI Settings tab.

Steps to change the Frequency Penalty:

- Go to the plugin menu on your WordPress dashboard.

- Click on the Dashboard page and find the AI Settings tab.

- Enter a new value in the Frequency Penalty field.

Frequency Penalty's Impact on Generated Text Diversity

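Frequency Penalty influences the diversity of the generated text by discouraging words that have already been used.

- Higher values: Push the model away from words it has already used, so the wording becomes more varied.

- Lower values: Leave the model's word choices largely unchanged, so repeated wording is more likely.

Values between 0 and 1 balance natural phrasing against reduced repetition.

- 0: No penalty, usual behavior.

- 1: A noticeable penalty on repeated words. The text stays coherent but reuses the same wording less often.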

Use higher values for more diverse and less repetitive text.

Differences Between Frequency and Presence Penalty

- Frequency Penalty: Penalizes a token in proportion to how many times it has already appeared in the text so far.

- Presence Penalty: Applies a one-time penalty to any token that has already appeared at least once, no matter how often.

Both increase text diversity but in different ways. Depending on your needs, you might use one or both.

Frequency Penalty grows with every repetition, so it mainly discourages overused words and phrases. Presence Penalty is the same whether a token has appeared once or many times, so it mainly nudges the model toward new words and topics.

Using both can help control the diversity and novelty of the generated text.
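To make the difference concrete, here is a minimal sketch. It assumes the commonly documented scheme in which the frequency penalty is multiplied by a token's count in the text so far, while the presence penalty is subtracted once for any token that has appeared at all. The `penalize` function and the sample scores are hypothetical and only illustrate the arithmetic.

```python
from collections import Counter


def penalize(logits, tokens_so_far, frequency_penalty=0.0, presence_penalty=0.0):
    """Apply both penalties to each token's logit (assumed scheme, for illustration)."""
    counts = Counter(tokens_so_far)
    adjusted = {}
    for tok, logit in logits.items():
        count = counts[tok]
        adjusted[tok] = (
            logit
            - frequency_penalty * count                   # scales with repetitions
            - presence_penalty * (1 if count > 0 else 0)  # flat, applied once
        )
    return adjusted


# Hypothetical scores: all three tokens start out equally likely.
logits = {"the": 3.0, "market": 3.0, "growth": 3.0}
# Hypothetical text so far: "the" four times, "market" once, "growth" never.
tokens_so_far = ["the", "the", "the", "the", "market"]

print(penalize(logits, tokens_so_far, frequency_penalty=0.5))
# {'the': 1.0, 'market': 2.5, 'growth': 3.0}
print(penalize(logits, tokens_so_far, presence_penalty=0.5))
# {'the': 2.5, 'market': 2.5, 'growth': 3.0}
```

With the frequency penalty alone, "the" is penalized four times as much as "market". With the presence penalty alone, both are penalized equally, and only the unused token "growth" keeps its original score.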