Top_P

What is Top_P?

Top_P, also known as nucleus sampling, controls the diversity of generated text. At each step, the model samples only from the smallest set of most probable tokens whose combined probability reaches the Top_P value.

- Top_P = 0.1: Only the tokens that make up the top 10% of the probability mass are considered.

- Top_P = 0.9: The tokens that make up the top 90% of the probability mass are considered.
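The cutoff rule above can be sketched in a few lines of Python. This is a toy illustration over a hand-made distribution, not OpenAI's implementation; the function name `nucleus_filter` and the token probabilities are hypothetical.

```python
def nucleus_filter(probs: dict[str, float], top_p: float) -> dict[str, float]:
    """Keep the smallest set of highest-probability tokens whose cumulative
    probability reaches top_p, then renormalize the kept tokens to sum to 1."""
    if not 0.0 < top_p <= 1.0:
        raise ValueError("top_p must be in (0, 1]")
    # Sort tokens from most to least probable.
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept: list[tuple[str, float]] = []
    cumulative = 0.0
    for token, p in ranked:
        kept.append((token, p))
        cumulative += p
        # Stop as soon as the nucleus covers at least top_p of the mass.
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {token: p / total for token, p in kept}


# Hypothetical next-token probabilities, made up for illustration.
probs = {"the": 0.50, "a": 0.30, "this": 0.15, "banana": 0.04, "zebra": 0.01}

print(nucleus_filter(probs, 0.1))          # {'the': 1.0}
print(sorted(nucleus_filter(probs, 0.9)))  # ['a', 'the', 'this']
```

With Top_P = 0.1 the single most likely token already covers the required mass, so it is the only candidate; with Top_P = 0.9 three tokens are needed to reach 90%.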

OpenAI generally recommends adjusting either temperature or Top_P, but not both.
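As an illustration of that recommendation, a raw request body for OpenAI's Chat Completions endpoint might set `top_p` and simply omit `temperature`, leaving it at the API default. This is a hypothetical sketch: the model name and prompt are placeholders, and it does not show how this plugin builds its own requests.

```python
import json

# Hypothetical Chat Completions request body. The model name and prompt are
# placeholders; only top_p is tuned, and temperature is omitted so the API
# uses its default value.
payload = {
    "model": "gpt-4o-mini",
    "messages": [{"role": "user", "content": "Write a product tagline."}],
    "top_p": 0.1,
}

print(json.dumps(payload, indent=2))
```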

Adjusting the Top_P Setting

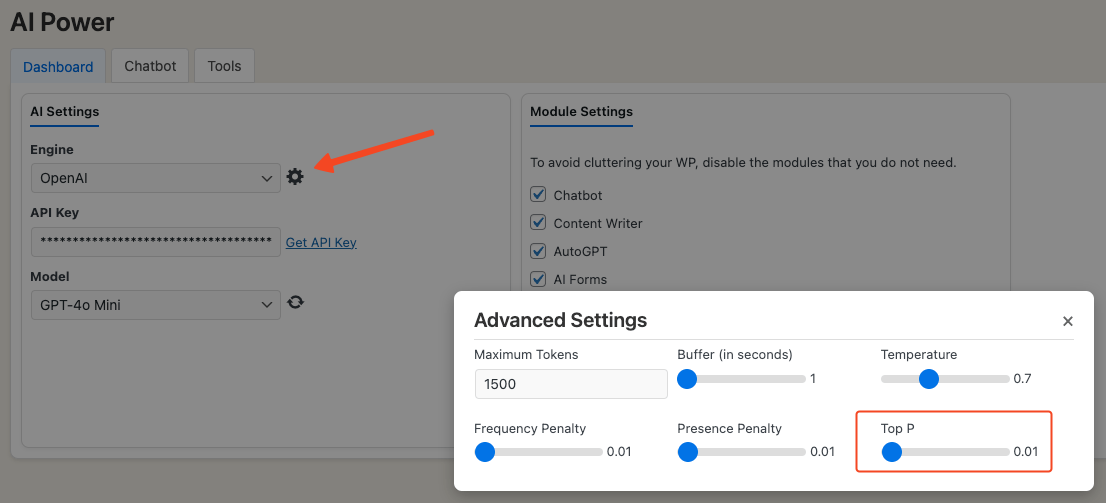

The default Top_P value is 0.01. You can change it in the AI Settings tab.

Steps to change the Top_P:

- Go to the plugin menu on your WordPress dashboard.

- Click the plugin's Dashboard page, then open the AI Settings tab.

- Enter a new value in the Top_P field.

Balancing Diversity in GPT Text Generation

Top_P controls how many of the highest-probability tokens the model may choose from at each step.

- Lower Top_P value: More focused, repetitive, or "safe" text.

- Higher Top_P value: More diverse text.

Lowering Top_P makes the model produce more conservative text, because it considers only the most probable tokens, which reduces diversity. Raising Top_P lets the model take more risks by also sampling lower-probability tokens, which produces more diverse text but increases the chance of nonsensical sentences.
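To see the effect on diversity, the hypothetical sketch below samples repeatedly from a made-up next-token distribution at several Top_P values. It reimplements nucleus sampling in plain Python for illustration only; the function name `sample_top_p` and the probabilities are assumptions, and real models apply the same cutoff over their full vocabulary.

```python
import random


def sample_top_p(probs: dict[str, float], top_p: float, rng: random.Random) -> str:
    """Draw one token using nucleus (top-p) sampling."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    nucleus, cumulative = [], 0.0
    for token, p in ranked:
        nucleus.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break
    tokens = [t for t, _ in nucleus]
    weights = [p for _, p in nucleus]
    # random.choices treats weights as relative, so no renormalization is needed.
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical next-token probabilities, made up for illustration.
probs = {"the": 0.50, "a": 0.30, "this": 0.15, "banana": 0.04, "zebra": 0.01}

for top_p in (0.1, 0.6, 0.9, 1.0):
    rng = random.Random(0)  # fixed seed so each run is reproducible
    draws = {sample_top_p(probs, top_p, rng) for _ in range(1000)}
    print(f"top_p={top_p}: {sorted(draws)}")
```

At Top_P = 0.1 every draw is "the"; at 0.9 the draws can also include "a" and "this"; the rare tokens "banana" and "zebra" are only eligible at 1.0.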

Summary

- Higher Top_P: Generates more diverse, riskier text.

- Lower Top_P: Generates safer, more repetitive text.

Adjust the Top_P parameter to control the level of "risk" the model takes when generating text.