Maximum Length

What is Maximum Length?

Language models process text by splitting it into tokens, which can be whole words or pieces of words.

For example, a tokenizer might split "fantastic" into "fan", "tas", and "tic", while a short, common word such as "gold" is a single token. Many tokens also include a leading space, such as " hi" and " greetings".

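The number of tokens in a request depends on the length of both the input (the prompt) and the output (the completion). For English text, these rules of thumb apply: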

- 1 token ≈ 4 characters in English

- 1 token ≈ ¾ of a word

- 100 tokens ≈ 75 words

- 1-2 sentences ≈ 30 tokens

- 1 paragraph ≈ 100 tokens

- 1,500 words ≈ 2,048 tokens
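The rules of thumb above can be turned into a quick estimator. This is a minimal sketch for English text only; the function names are hypothetical, and exact counts require the model's own tokenizer (for example, OpenAI's `tiktoken` library).

```python
import math

# Rules of thumb from the list above (English text only; real counts
# depend on the model's tokenizer).
CHARS_PER_TOKEN = 4
WORDS_PER_TOKEN = 0.75


def estimate_tokens_from_chars(text: str) -> int:
    """Rough token estimate: about 4 characters per token."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_tokens_from_words(word_count: int) -> int:
    """Rough token estimate: about 3/4 of a word per token."""
    return math.ceil(word_count / WORDS_PER_TOKEN)


if __name__ == "__main__":
    print(estimate_tokens_from_words(75))    # 100
    print(estimate_tokens_from_words(1500))  # 2000
```

The word-based estimate for 1,500 words gives 2,000 tokens, close to the 2,048 listed above. These are approximations, so different rules will not agree exactly.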

The prompt and the generated completion together must not exceed the model's maximum context length. For many models this is 4,096 tokens (about 3,000 words); other models allow more, as the comparison below shows. Pricing is quoted per 1,000 tokens, which is about 750 words.
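The two rules in the paragraph above (prompt plus completion must fit the context window, and pricing is per 1,000 tokens) reduce to simple arithmetic. This sketch uses hypothetical helper names; `context_length` defaults to the 4,096 tokens mentioned above, and `price_per_1k` is a placeholder you would replace with your provider's current rate.

```python
# Hypothetical helpers; price_per_1k is a placeholder, not a real price.
def fits_context(prompt_tokens: int, max_tokens: int, context_length: int = 4096) -> bool:
    """True if the prompt plus the longest possible completion fits the window."""
    return prompt_tokens + max_tokens <= context_length


def estimate_cost(total_tokens: int, price_per_1k: float) -> float:
    """Cost of a request when pricing is quoted per 1,000 tokens."""
    return total_tokens / 1000 * price_per_1k


if __name__ == "__main__":
    print(fits_context(2500, 1500))  # True  (4,000 <= 4,096)
    print(fits_context(3000, 1500))  # False (4,500 > 4,096)
```

Checking against the maximum completion length, rather than the completion you expect, is the conservative choice: the model may use the full allowance.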

Comparing Token Limits

Understanding token limits helps in selecting the right model for your tasks. Here’s a comparison of the maximum tokens for various models:

| Model Group | Model | Maximum Length (tokens) |
|---|---|---|
| GPT-4 Models | gpt-4 | 8,192 |
| | gpt-4o | 4,096 |
| | gpt-4-32k | 32,768 |
| GPT-3.5 Models | gpt-3.5-turbo | 4,096 |
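To see how the table affects a request, the sketch below stores its values in a dictionary and works out how many tokens remain for the completion once the prompt is counted. The names `MODEL_LIMITS` and `completion_budget` are hypothetical, clamping the request to the remaining space is one possible policy (not necessarily what the plugin does), and the limits should be checked against the provider's current documentation.

```python
# Maximum lengths (tokens) copied from the table above.
MODEL_LIMITS = {
    "gpt-4": 8192,
    "gpt-4o": 4096,
    "gpt-4-32k": 32768,
    "gpt-3.5-turbo": 4096,
}


def completion_budget(model: str, prompt_tokens: int, requested: int = 1500) -> int:
    """Largest completion that fits: the requested length, capped by the space left."""
    limit = MODEL_LIMITS[model]
    remaining = limit - prompt_tokens
    if remaining <= 0:
        raise ValueError(f"prompt of {prompt_tokens} tokens exceeds {model}'s limit of {limit}")
    return min(requested, remaining)


if __name__ == "__main__":
    print(completion_budget("gpt-4", 1000))          # 1500
    print(completion_budget("gpt-3.5-turbo", 3000))  # 1096
```

The default `requested=1500` mirrors the plugin's default Maximum Length.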

Adjusting the Maximum Length Setting

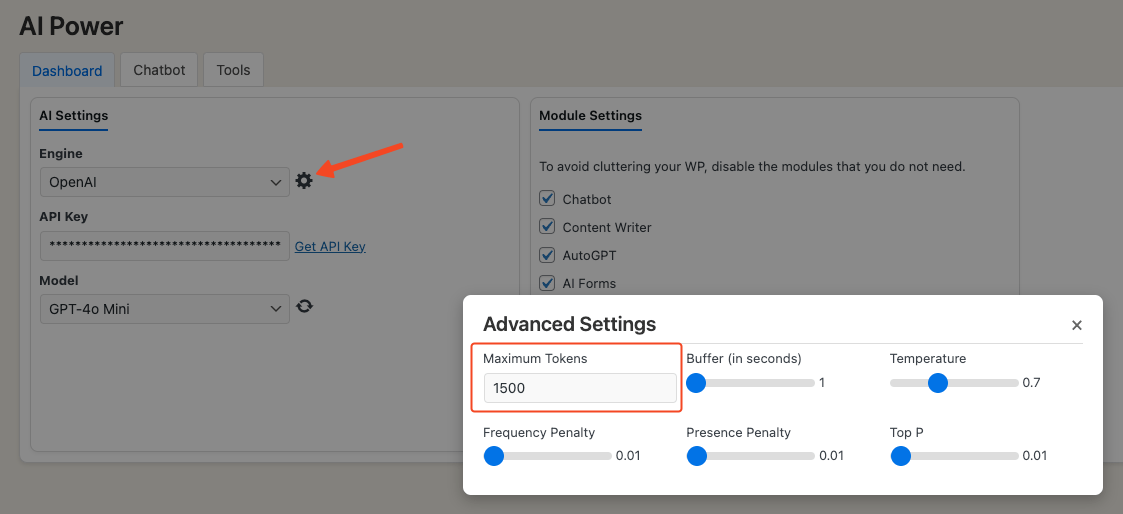

The default Maximum Length is 1500 tokens. You can change it in the AI Settings tab.

Steps to change the Maximum Length:

- Go to the plugin menu on your WordPress dashboard.

- Open the plugin's Dashboard page and select the AI Settings tab.

- Enter a new value in the Maximum Length field.

Experiment with different values to find the setting that best suits your content.

Impact of Max Tokens Value on Generated Content

Max Tokens (the value set in the Maximum Length field) caps the number of tokens the GPT model can generate in a single call, which limits the size of the output.

- Useful for generating a specific amount of text.

- Helps fit generated text into a specific format or application.

- Can reduce response time and cost, because fewer tokens are generated.
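As an illustration of where this limit goes, the sketch below builds a request body for OpenAI's Chat Completions API with the `max_tokens` field set. It assumes the plugin passes the Maximum Length value as `max_tokens`; `build_chat_request` is a hypothetical helper, and some newer OpenAI models expect `max_completion_tokens` instead.

```python
import json


def build_chat_request(prompt: str, model: str = "gpt-3.5-turbo", max_tokens: int = 1500) -> dict:
    """Build a Chat Completions request body that caps the reply at max_tokens."""
    if max_tokens < 1:
        raise ValueError("max_tokens must be a positive integer")
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


if __name__ == "__main__":
    body = build_chat_request("Write a product description for a desk lamp.", max_tokens=200)
    print(json.dumps(body, indent=2))
```

The cap applies only to the generated reply; the prompt's tokens still count toward the model's context length.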

However, setting Max Tokens too low can cut the response off mid-sentence, leaving incomplete or grammatically broken text.
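One way to catch this is to check why generation stopped. In Chat Completions responses, `finish_reason` is `"length"` when the token cap was reached. The sketch below detects that case and, as one possible mitigation, trims the text back to its last complete sentence. The helper names and the sample response are hypothetical, and the sentence trimming is a simple heuristic that only looks for `.`, `!`, and `?`.

```python
def is_truncated(response: dict) -> bool:
    """True if the model stopped because it reached the max_tokens cap."""
    # finish_reason is "length" when the token cap was hit and "stop"
    # when the model ended on its own.
    return response["choices"][0]["finish_reason"] == "length"


def trim_to_last_sentence(text: str) -> str:
    """Drop a trailing partial sentence (simple heuristic on . ! ?)."""
    cut = max(text.rfind(ch) for ch in ".!?")
    return text[: cut + 1] if cut != -1 else text


if __name__ == "__main__":
    # Hand-written, trimmed response for illustration only.
    sample = {
        "choices": [
            {
                "message": {"role": "assistant", "content": "Our lamp is bright. It also folds"},
                "finish_reason": "length",
            }
        ]
    }
    text = sample["choices"][0]["message"]["content"]
    if is_truncated(sample):
        text = trim_to_last_sentence(text)
    print(text)  # Our lamp is bright.
```

Raising the Maximum Length is usually a better fix than trimming, since trimming discards content the model already generated.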
