Moderation

A safe and respectful conversation environment depends on robust moderation. The Moderation feature provides it.

The Moderation feature in ChatGPT checks user messages for offensive or inappropriate content. It identifies and filters out this content, helping your chatbot keep conversations respectful, safe, and productive.

The Moderation feature is available only in the Pro plan.

It classifies content into the following categories:

| Category | Description |
|---|---|
| hate | Content that expresses, incites, or promotes hate based on race, gender, ethnicity, religion, nationality, sexual orientation, disability status, or caste. |
| hate/threatening | Hateful content that also includes violence or serious harm towards the targeted group. |
| self-harm | Content that promotes, encourages, or depicts acts of self-harm, such as suicide, cutting, and eating disorders. |
| sexual | Content meant to arouse sexual excitement, such as the description of sexual activity, or that promotes sexual services (excluding sex education and wellness). |
| sexual/minors | Sexual content that includes an individual who is under 18 years old. |
| violence | Content that promotes or glorifies violence or celebrates the suffering or humiliation of others. |
| violence/graphic | Violent content that depicts death, violence, or serious physical injury in extreme graphic detail. |
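To show how these categories appear in practice, here is a minimal sketch that reads a response from OpenAI's moderation endpoint (`/v1/moderations`). Each entry in that response's `results` list carries a `flagged` boolean and a `categories` map keyed by names such as those in the table. The sample response is hand-written for illustration, not real API output. The helper `flagged_categories` is hypothetical and not part of the plugin. Real responses may contain additional categories, and the helper simply reports whichever ones are true.

```python
import json

# Hypothetical helper (not part of the plugin): return the category names
# that the moderation endpoint marked true for a single input.
def flagged_categories(response: dict) -> list[str]:
    result = response["results"][0]
    if not result["flagged"]:
        return []
    # Dicts keep insertion order, so names come back in the API's order.
    return [name for name, hit in result["categories"].items() if hit]

# Hand-written example shaped like a /v1/moderations response.
# Only the fields used here are included; category_scores is omitted.
sample = json.loads("""
{
  "results": [
    {
      "flagged": true,
      "categories": {
        "hate": false,
        "hate/threatening": false,
        "self-harm": false,
        "sexual": false,
        "sexual/minors": false,
        "violence": true,
        "violence/graphic": false
      }
    }
  ]
}
""")

print(flagged_categories(sample))  # ['violence']
```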

Benefits

- Improved User Experience: Keeping interactions respectful and positive gives users a better experience, which can increase customer satisfaction and loyalty.

- Automated Content Moderation: The feature uses OpenAI's moderation models to check content automatically, so you don't have to monitor and manage inappropriate user input by hand.

- Customizable Response: You can customize the message users see when their content is flagged, so it matches your brand's communication style.

- Flexible Model Selection: Choose between the text-moderation-stable and text-moderation-latest models. text-moderation-latest is upgraded automatically over time, so you always use the most recent model; text-moderation-stable changes less often.

- Compliance With Policies: Filtering out content that doesn't comply with OpenAI's usage policies helps keep conversations respectful and safe for all users.

- Transparency and Control: The feature keeps a log of moderated content, giving you transparency and control over the chatbot's interactions.

By enabling the Moderation feature, you make your chatbot safer, more respectful, and more user-friendly for your customers or audience.

Enabling Moderation

This guide walks you through enabling and configuring the Moderation feature in your chatbot. The settings are the same for the Shortcode and the Widget. Each is configured from its own tab in the dashboard.

Shortcode

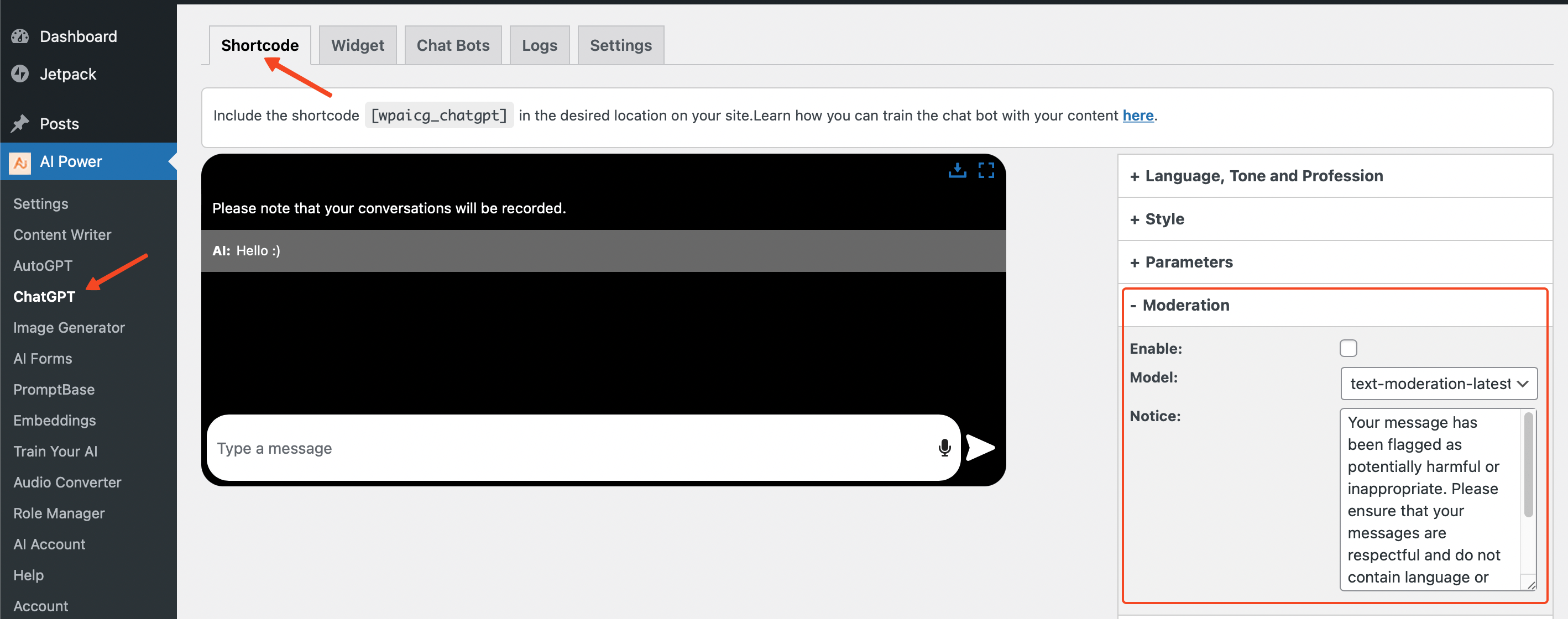

- Navigate to the ChatGPT - Shortcode tab in your dashboard.

- Click on the Moderation tab located on the right side of your screen.

- Look for the Enable Moderation and Model options:

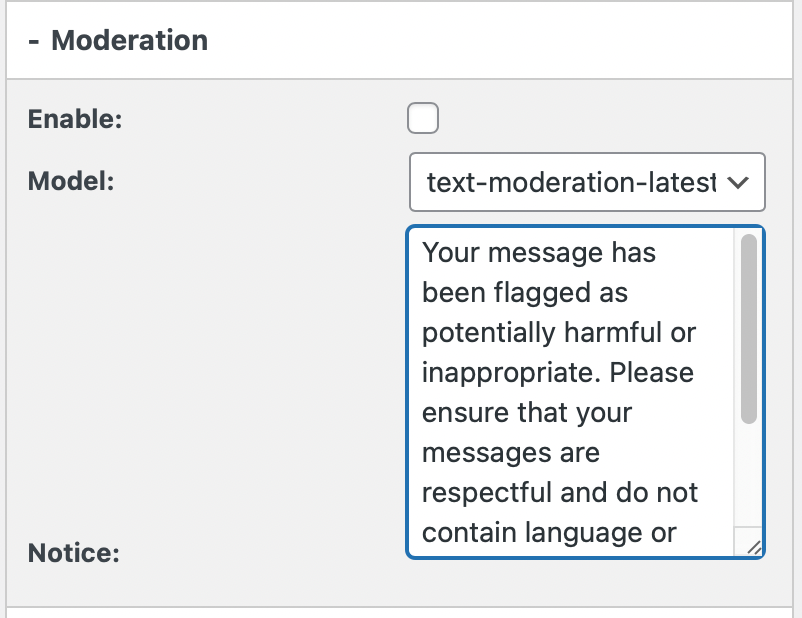

- Enable Moderation: Toggle this switch to enable the chatbot to moderate user messages to detect any offensive words or content.

- Model: Choose the content moderation model you wish to use. Two options are available: text-moderation-stable and text-moderation-latest. The default is text-moderation-latest, which automatically upgrades over time.

- Notice: Customize the notice message that will be displayed when offensive content is detected. The default message is "Your message has been flagged as potentially harmful or inappropriate..."

- After adjusting the settings, click on the Save button to apply the changes.
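The following sketch shows one way the three settings could fit together, assuming this flow: when Enable Moderation is on, the user's message is sent to OpenAI's moderation endpoint with the selected Model, and if the result is flagged, the Notice is returned in place of the chatbot's reply. That flow is an assumption, and `ModerationSettings`, `build_moderation_request`, and `handle_message` are hypothetical names, not the plugin's code. The request is built with the standard library but never sent. The moderation call is passed in as a function so the example runs offline.

```python
import json
import urllib.request
from dataclasses import dataclass
from typing import Callable

MODERATION_URL = "https://api.openai.com/v1/moderations"

@dataclass
class ModerationSettings:
    # Hypothetical mirror of the options on the Moderation tab.
    enabled: bool = False
    model: str = "text-moderation-latest"  # or "text-moderation-stable"
    notice: str = "Example notice text."   # illustrative, not the plugin's default

def build_moderation_request(text: str, settings: ModerationSettings,
                             api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) a POST request to the moderation endpoint."""
    body = json.dumps({"input": text, "model": settings.model}).encode("utf-8")
    return urllib.request.Request(
        MODERATION_URL,
        data=body,
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )

def handle_message(text: str, settings: ModerationSettings,
                   moderate: Callable[[str, str], dict],
                   reply: Callable[[str], str]) -> str:
    """Return the notice if the message is flagged, otherwise the chatbot's reply."""
    if settings.enabled:
        # `moderate` stands in for sending the request and parsing the JSON response.
        result = moderate(text, settings.model)["results"][0]
        if result["flagged"]:
            return settings.notice
    return reply(text)

# Offline stand-ins for the moderation API and the chatbot.
def fake_moderate(text: str, model: str) -> dict:
    return {"results": [{"flagged": "attack" in text, "categories": {}}]}

def echo(text: str) -> str:
    return f"Bot: {text}"

settings = ModerationSettings(enabled=True, notice="Please keep the conversation respectful.")
print(handle_message("hello", settings, fake_moderate, echo))        # Bot: hello
print(handle_message("attack them", settings, fake_moderate, echo))  # Please keep the conversation respectful.

req = build_moderation_request("hello", settings, "YOUR_API_KEY")
print(req.get_method(), req.full_url)  # POST https://api.openai.com/v1/moderations
```

Passing `moderate` and `reply` as parameters keeps the decision logic separate from the network call, so you can test it without an API key.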

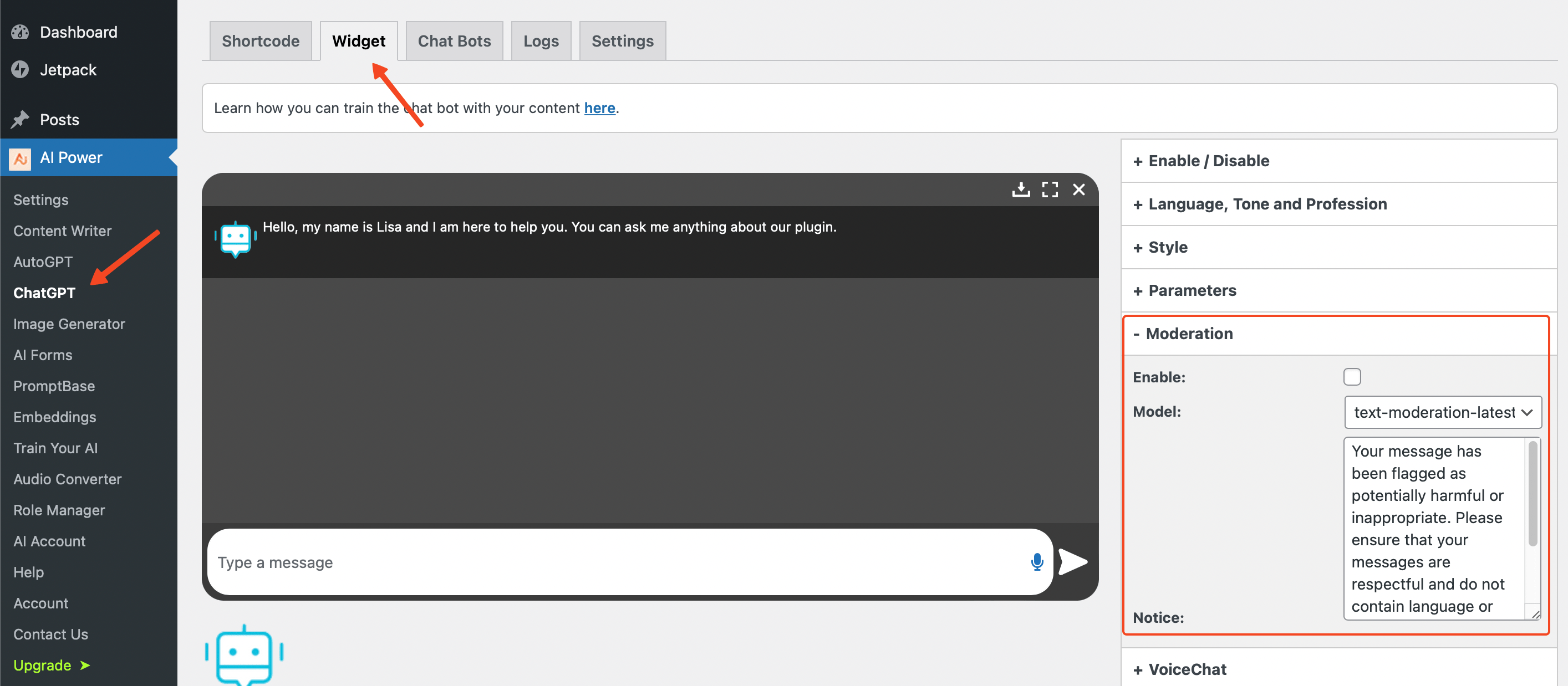

- Navigate to the ChatGPT - Widget tab in your dashboard.

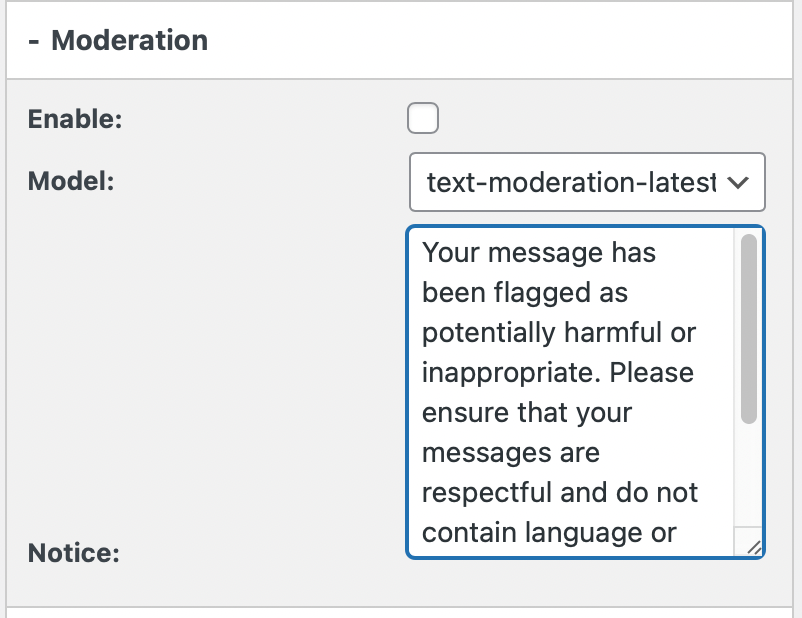

- Click the Moderation tab on the right side of your screen.

- Look for the Enable Moderation, Model, and Notice options:

- Enable Moderation: Turn this switch on to have the chatbot check user messages for offensive words or content.

- Model: Choose the content moderation model to use. Two options are available: text-moderation-stable and text-moderation-latest. The default, text-moderation-latest, is upgraded automatically over time.

- Notice: Customize the message displayed when offensive content is detected. The default message is "Your message has been flagged as potentially harmful or inappropriate..."

- After adjusting the settings, click Save to apply the changes.

Moderation Log

To monitor the effectiveness of the Moderation feature and review flagged content, use the Moderation Log. It is available for both the Shortcode and the Widget.

Shortcode

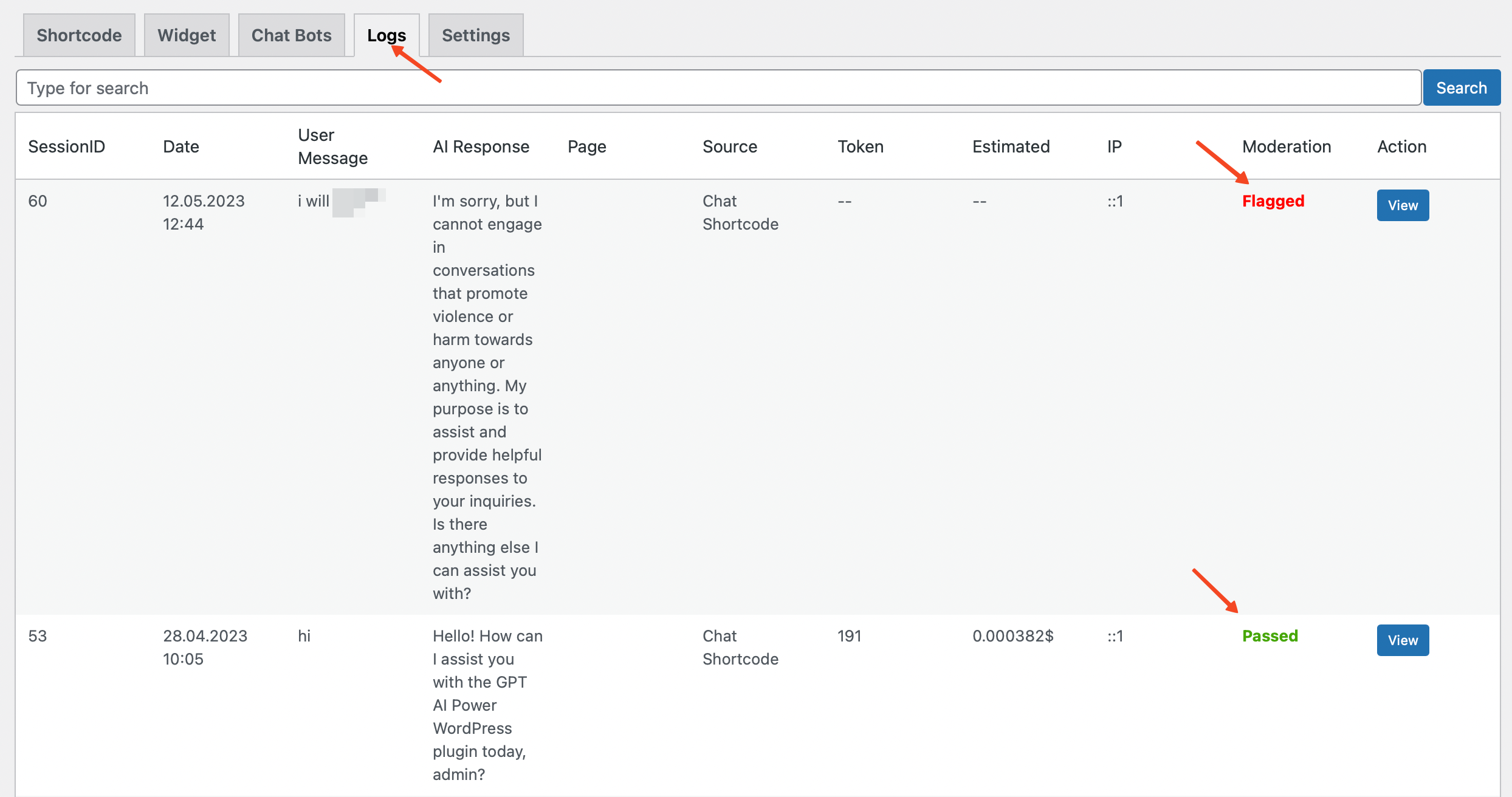

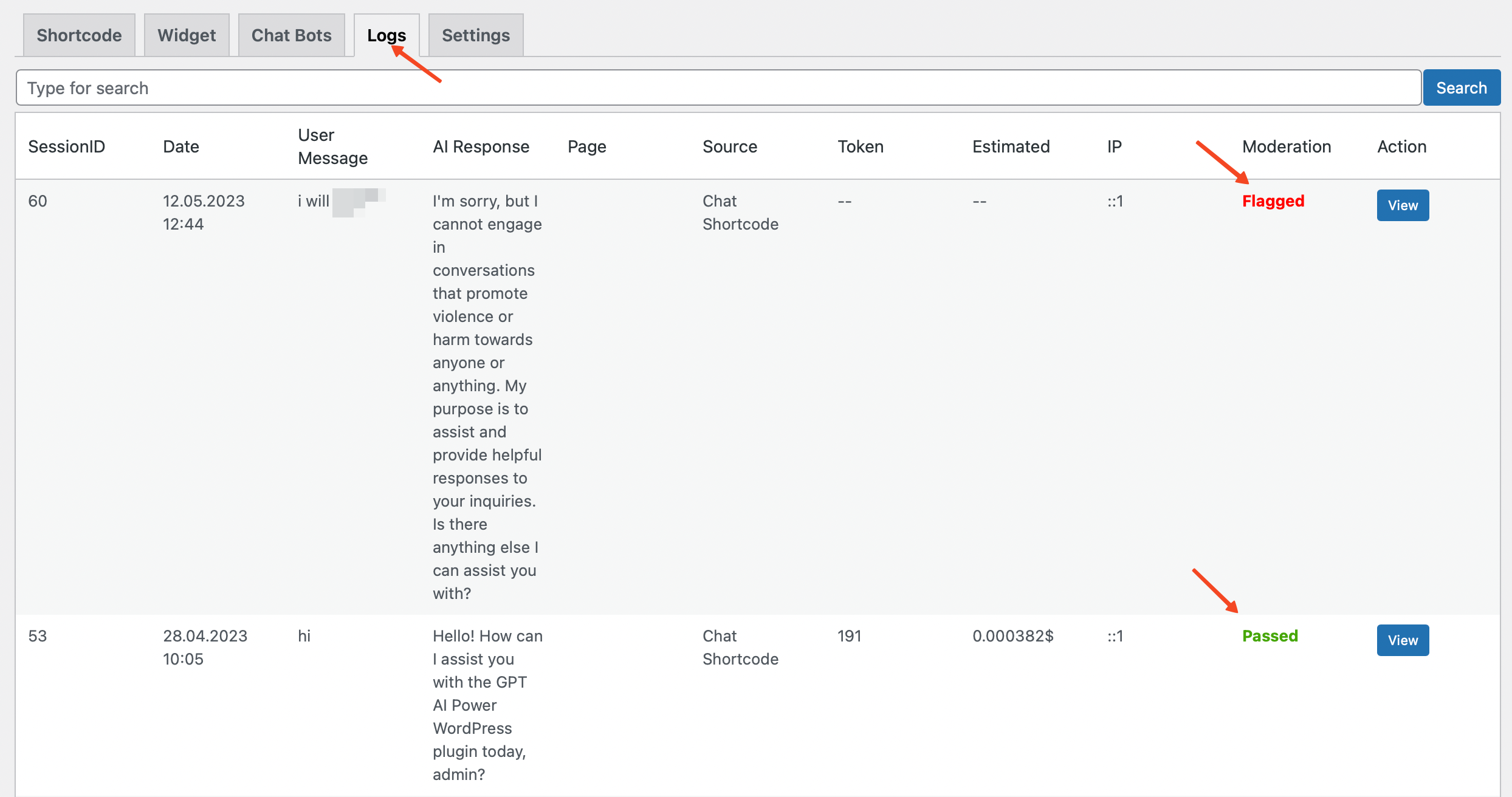

- Navigate to the ChatGPT - Shortcode tab in your dashboard.

- Click the Logs tab at the top of your screen.

- The Moderation column labels each conversation as either Passed or Flagged.

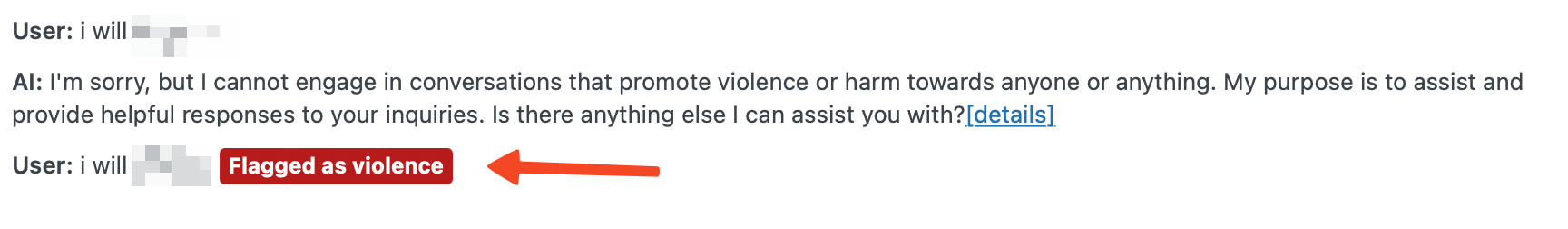

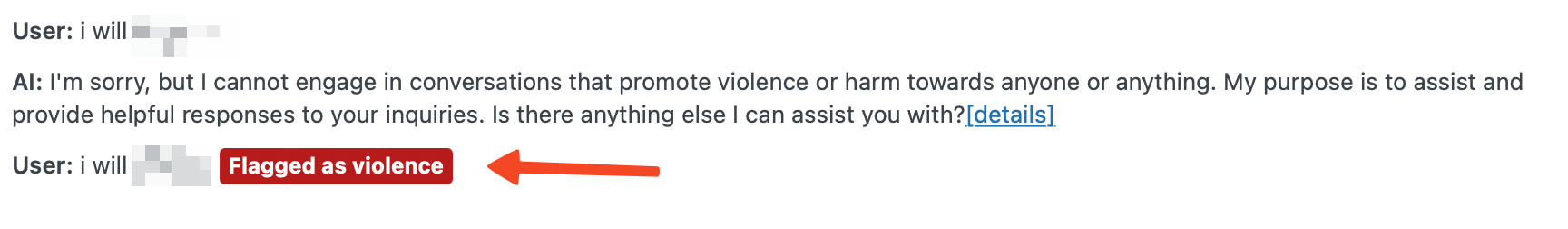

- To see why a conversation was flagged, click the View button next to it. This opens a detailed view of the conversation.

- In the detailed view, flagged content is marked with a red label that states the reason for the flag (for example, "Flagged as Violence").

- Use this log to review moderated content and see how the Moderation feature helps maintain a respectful and safe conversation environment.

Widget

- Navigate to the ChatGPT - Widget tab in your dashboard.

- Click the Logs tab at the top of your screen.

- The Moderation column labels each conversation as either Passed or Flagged.

- To see why a conversation was flagged, click the View button next to it. This opens a detailed view of the conversation.

- In the detailed view, flagged content is marked with a red label that states the reason for the flag (for example, "Flagged as Violence").

- Use this log to review moderated content and see how the Moderation feature helps maintain a respectful and safe conversation environment.
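The Passed/Flagged column and the red reason labels can be seen as a summary of the moderation results for a conversation. Here is a minimal sketch of one way to derive them. `conversation_status` and `flag_labels` are hypothetical, and so are two of its rules: one flagged message marks the whole conversation as Flagged, and labels are built by title-casing the category name. This is not how the plugin stores or renders its log.

```python
# Hypothetical: summarize a conversation's moderation results for a log view.
def conversation_status(results: list[dict]) -> str:
    # Assumption: a single flagged message marks the whole conversation as Flagged.
    return "Flagged" if any(r["flagged"] for r in results) else "Passed"

def flag_labels(result: dict) -> list[str]:
    # e.g. "violence" -> "Flagged as Violence", "self-harm" -> "Flagged as Self-Harm"
    return [f"Flagged as {name.title()}"
            for name, hit in result["categories"].items() if hit]

messages = [
    {"flagged": False, "categories": {"hate": False, "violence": False}},
    {"flagged": True, "categories": {"hate": False, "violence": True}},
]

print(conversation_status(messages))  # Flagged
for m in messages:
    print(flag_labels(m))
# []
# ['Flagged as Violence']
```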