Voice Chat

Voice chat makes your chatbot more interactive and engaging for your users.

To support it, we've integrated the chatbot with several voice technologies that cover both spoken input and spoken output.

- Voice Input: For voice input, we've integrated with Whisper, which is a Speech-to-Text model from OpenAI. With this feature, your users can speak directly to the chatbot using their microphone, and the chatbot will transcribe and process their spoken words as if they were typed messages.

- Voice Output: You can enable voice output for your chatbot through our integrations with OpenAI, Google Text-to-Speech, and ElevenLabs. This allows your chatbot to read out its responses, creating a more dynamic and conversational experience for your users.

By combining these features, you can create a seamless voice chat experience for your users, making your chatbot more accessible and intuitive to use.

Voice Input

You can enable or disable the voice input.

If you enable this option, the chatbot will display a microphone icon.

When the user clicks on the microphone icon, the chatbot will start listening to the user.

The user can speak to the chatbot, and the chatbot will convert the speech to text. You can customize the colors of the mic and stop buttons.

Whisper Integration

Whisper is an advanced Automatic Speech Recognition (ASR) system developed by OpenAI.

It's designed to convert spoken language into written text, and it's trained on a large amount of multilingual and multitask supervised data collected from the web.

Integrating Whisper with our chatbot can elevate the user experience, providing a hands-free mode of interaction that is particularly useful for users who are visually impaired, have motor disabilities, or simply prefer voice interaction.
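As a rough illustration of what happens behind the microphone icon, the sketch below prepares the form fields for a Whisper transcription request. It assumes OpenAI's public transcription endpoint, its `whisper-1` model name, its documented list of upload formats, and its 25 MB upload limit. The helper name `build_transcription_fields` is hypothetical, and the plugin's actual implementation may differ.

```python
from pathlib import Path
from typing import Optional

# Assumptions taken from OpenAI's public API documentation, not from the plugin.
TRANSCRIPTION_URL = "https://api.openai.com/v1/audio/transcriptions"
SUPPORTED_EXTENSIONS = {"mp3", "mp4", "mpeg", "mpga", "m4a", "wav", "webm"}
MAX_UPLOAD_BYTES = 25 * 1024 * 1024  # 25 MB upload limit


def build_transcription_fields(
    filename: str, size_bytes: int, language: Optional[str] = None
) -> dict:
    """Validate a recording and return the non-file form fields for the request.

    The audio itself would be sent as a multipart "file" field alongside these.
    """
    extension = Path(filename).suffix.lower().lstrip(".")
    if extension not in SUPPORTED_EXTENSIONS:
        raise ValueError(f"unsupported audio format: {extension or 'none'}")
    if size_bytes > MAX_UPLOAD_BYTES:
        raise ValueError("recording exceeds the 25 MB upload limit")

    fields = {"model": "whisper-1", "response_format": "json"}
    if language:
        # Optional language hint such as "en"; omit it to let the model detect.
        fields["language"] = language
    return fields
```

The returned text would then be handled like any typed message, which matches the behavior described above.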

Here's how you can enable and customize the Whisper integration:

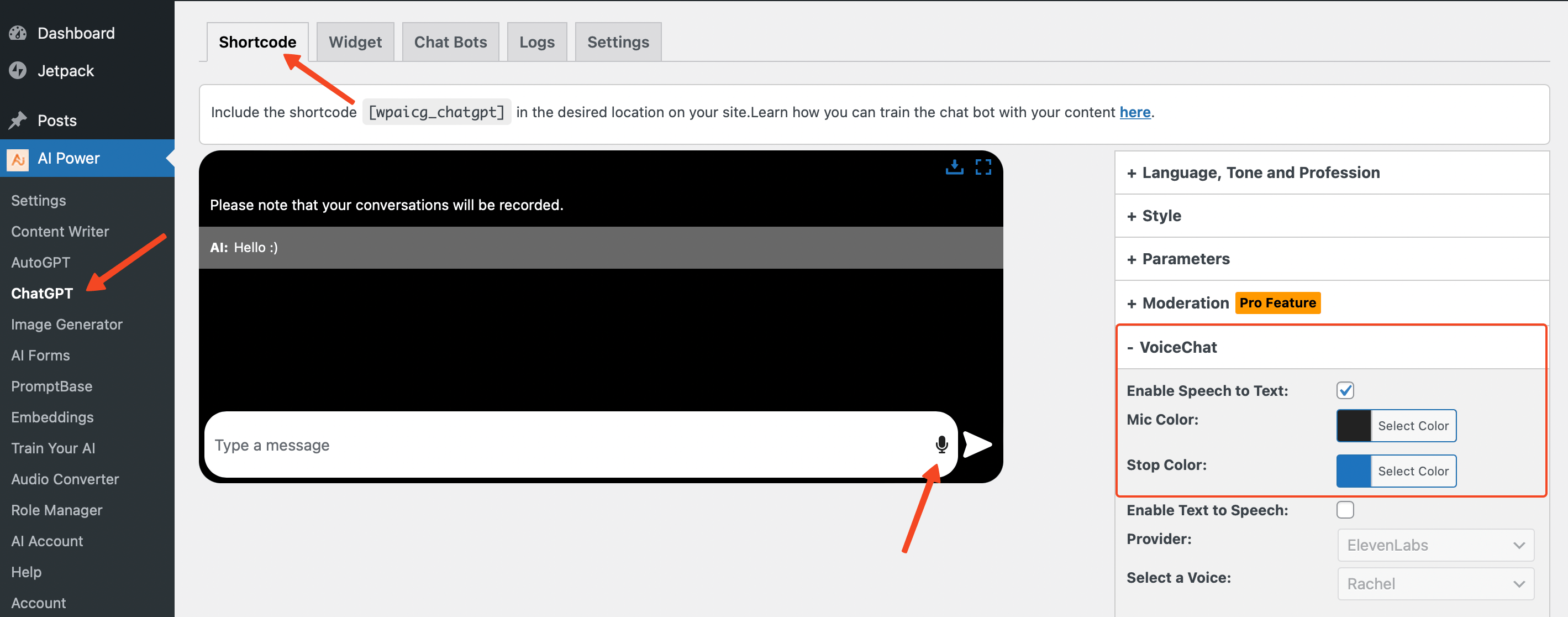

- Shortcode

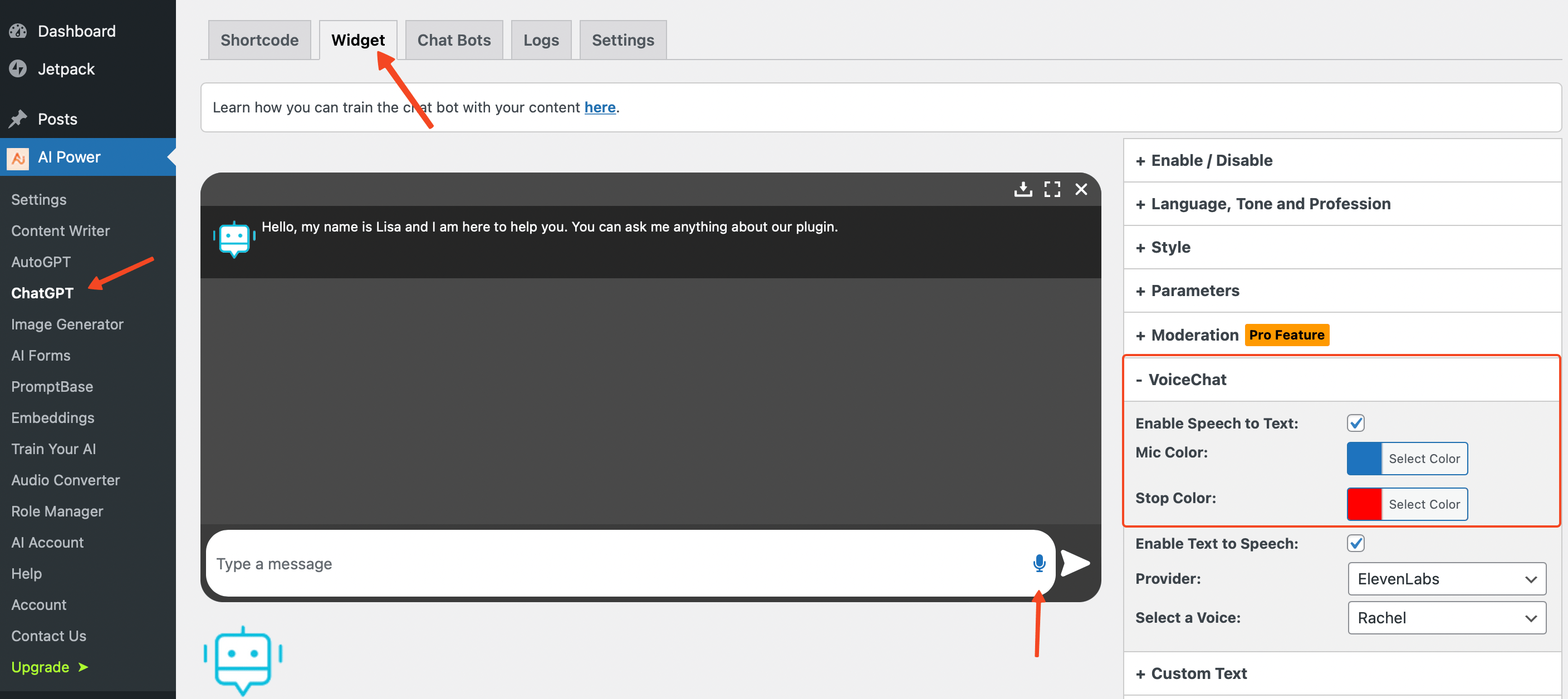

- Widget

- Navigate to the ChatGPT - Shortcode tab in your dashboard.

- Find the VoiceChat tab located on the right side of your screen.

- Here, you will find several settings related to managing voice input:

- Enable Text to Speech: Toggle this switch to enable or disable voice input. When enabled, a microphone icon will appear in the chatbot text message field, allowing users to speak their messages.

- Mic Button Color: This setting allows you to customize the color of the microphone icon.

- Stop Button Color: This setting allows you to customize the color of the stop button that appears when the chatbot is actively listening.

- After adjusting the settings, click on the Save button to apply the changes.

- Navigate to the ChatGPT - Widget tab in your dashboard.

- Find the VoiceChat tab located on the right side of your screen.

- Here, you will find several settings related to managing voice input:

- Enable Text to Speech: Toggle this switch to enable or disable voice input. When enabled, a microphone icon will appear in the chatbot text message field, allowing users to speak their messages.

- Mic Button Color: This setting allows you to customize the color of the microphone icon.

- Stop Button Color: This setting allows you to customize the color of the stop button that appears when the chatbot is actively listening.

- After adjusting the settings, click on the Save button to apply the changes.

Voice Output

OpenAI

Our plugin now includes support for OpenAI's state-of-the-art Text-to-Speech (TTS) technology. This feature allows you to convert written text into lifelike spoken audio, enhancing the interactive experience of your ChatGPT bot.

Key Features and Capabilities:

- Multiple Voices: Choose from six built-in voices: alloy, echo, fable, onyx, nova, and shimmer, to find the perfect tone for your audience.

- High-Quality Audio Options: Select between the standard tts-1 model for lower latency or the tts-1-hd model for higher quality audio.

- Supported Output Formats: The default audio format is MP3, but you can also choose from Opus, AAC, or FLAC, depending on your needs.

  - Opus: Ideal for internet streaming and communication, offering low latency.

  - AAC: Perfect for digital audio compression, widely used in platforms like YouTube, Android, and iOS.

  - FLAC: Best for lossless audio compression, preferred by audiophiles for archiving.

- Voice Speed Control: Adjust the speed of the generated audio to suit your needs. Select a value from 0.25 to 4.0, with 1.0 being the default speed. This allows for greater flexibility in matching the pace of speech to your specific requirements.

- Language Support: While the TTS voices are optimized for English, the model supports a wide range of languages, following the Whisper model's language capabilities.
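To make the options above concrete, here is a minimal sketch of how they could map onto a request body for OpenAI's speech endpoint. The field names (`model`, `input`, `voice`, `response_format`, `speed`) follow OpenAI's public API. The helper `build_speech_payload` is hypothetical and only validates the values listed in this section; it is not the plugin's own code.

```python
# Endpoint from OpenAI's public API reference; the request would be sent
# there with an "Authorization: Bearer <API key>" header.
SPEECH_URL = "https://api.openai.com/v1/audio/speech"

# Values taken from the feature list in this section.
MODELS = {"tts-1", "tts-1-hd"}
VOICES = {"alloy", "echo", "fable", "onyx", "nova", "shimmer"}
FORMATS = {"mp3", "opus", "aac", "flac"}


def build_speech_payload(
    text: str,
    model: str = "tts-1",
    voice: str = "alloy",
    response_format: str = "mp3",
    speed: float = 1.0,
) -> dict:
    """Return a JSON-serializable body for a text-to-speech request."""
    if model not in MODELS:
        raise ValueError(f"unknown model: {model}")
    if voice not in VOICES:
        raise ValueError(f"unknown voice: {voice}")
    if response_format not in FORMATS:
        raise ValueError(f"unsupported format: {response_format}")
    if not 0.25 <= speed <= 4.0:
        raise ValueError("speed must be between 0.25 and 4.0")
    return {
        "model": model,
        "input": text,
        "voice": voice,
        "response_format": response_format,
        "speed": speed,
    }
```

For example, `build_speech_payload("Hello!", model="tts-1-hd", voice="nova", speed=1.25)` describes a higher-quality, slightly faster rendering of the same text.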

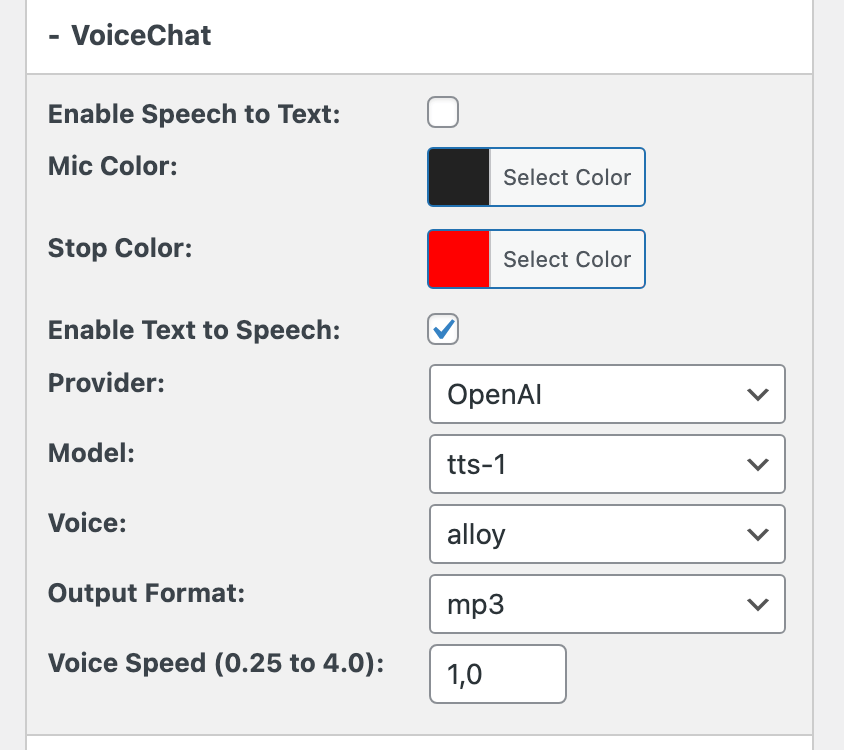

Usage:

To enable this feature, follow these steps:

- Access your chatbot settings and locate the "VoiceChat" tab.

- Tick the "Enable Text to Speech" option.

- Choose "OpenAI" as your provider from the dropdown menu.

- Select your preferred model, voice, output format, and voice speed.

- Click "Save" to apply the changes.

That's all! Now, interact with your chatbot and enjoy the new voice capabilities. Your bot is ready to speak!

With OpenAI's Text-to-Speech technology, you can now bring a new level of realism and engagement to your ChatGPT bot's interactions.

Google

Google Text-to-Speech API is a service that allows you to convert text into natural-sounding speech.

Powered by Google’s leading AI technologies, it offers high-fidelity speech, a vast selection of voices, voice customization, and much more.

Features of Google Text-to-Speech:

- High-fidelity Speech: Google’s groundbreaking technologies generate human-like speech, delivering voices of near-human quality.

- Wide Voice Selection: Choose from a set of 380+ voices across 50+ languages and variants, including Mandarin, Hindi, Spanish, Arabic, Russian, and more.

- Custom Voice: Train a custom voice model using your own audio recordings to create a unique voice for your organization.

- Voice Tuning: Personalize the pitch of your selected voice and adjust your speaking rate.

Google provides new customers with $300 in free credits to spend on their Text-to-Speech service. However, please be aware that costs will apply beyond these initial credits. For a detailed breakdown of costs, please refer to Google’s official pricing page. In terms of limitations, voice customization and language options might vary depending on your region and the specific features of the Google Text-to-Speech service.

Here's how you can enable and customize the Google integration:

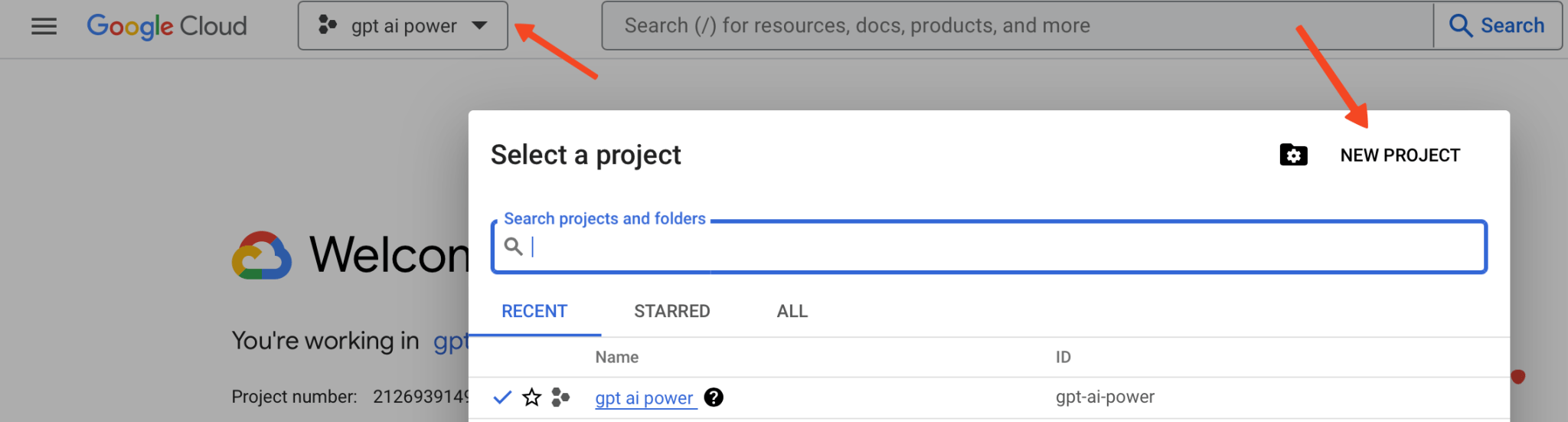

- Navigate to the Google Cloud Console.

- Create a new project, or use one of your existing projects.

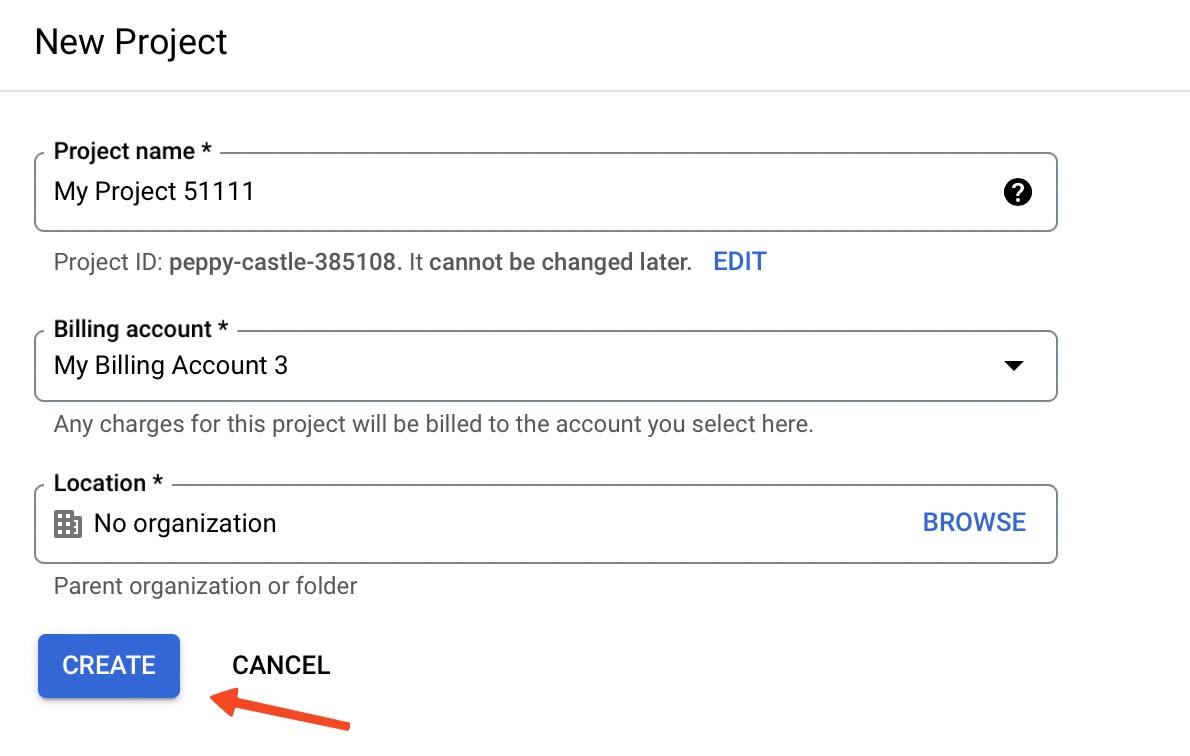

- Give your project a name and then click the Create button.

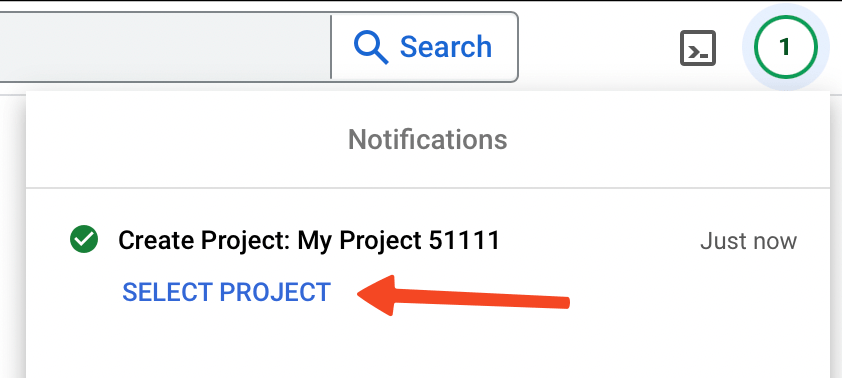

- After it has been created, make sure that you select it.

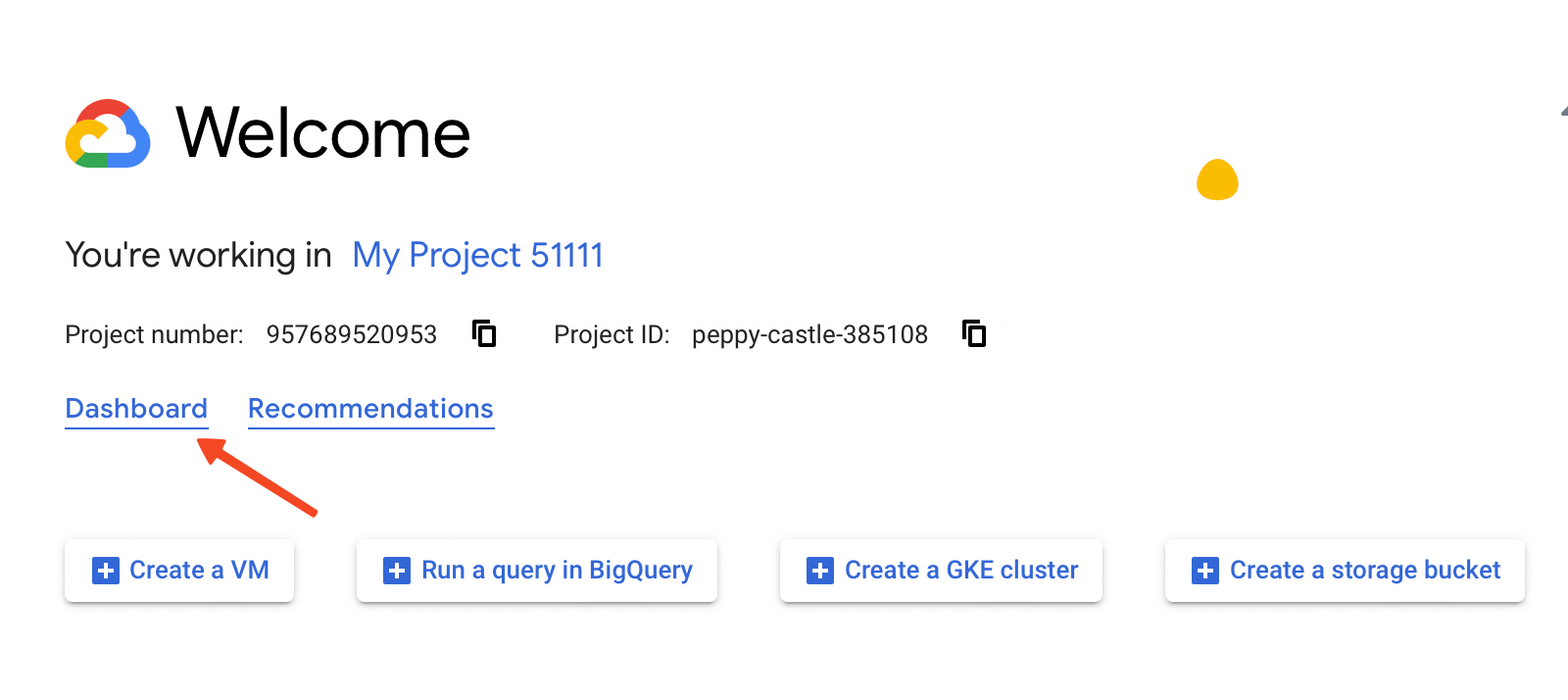

- Click on the Dashboard link.

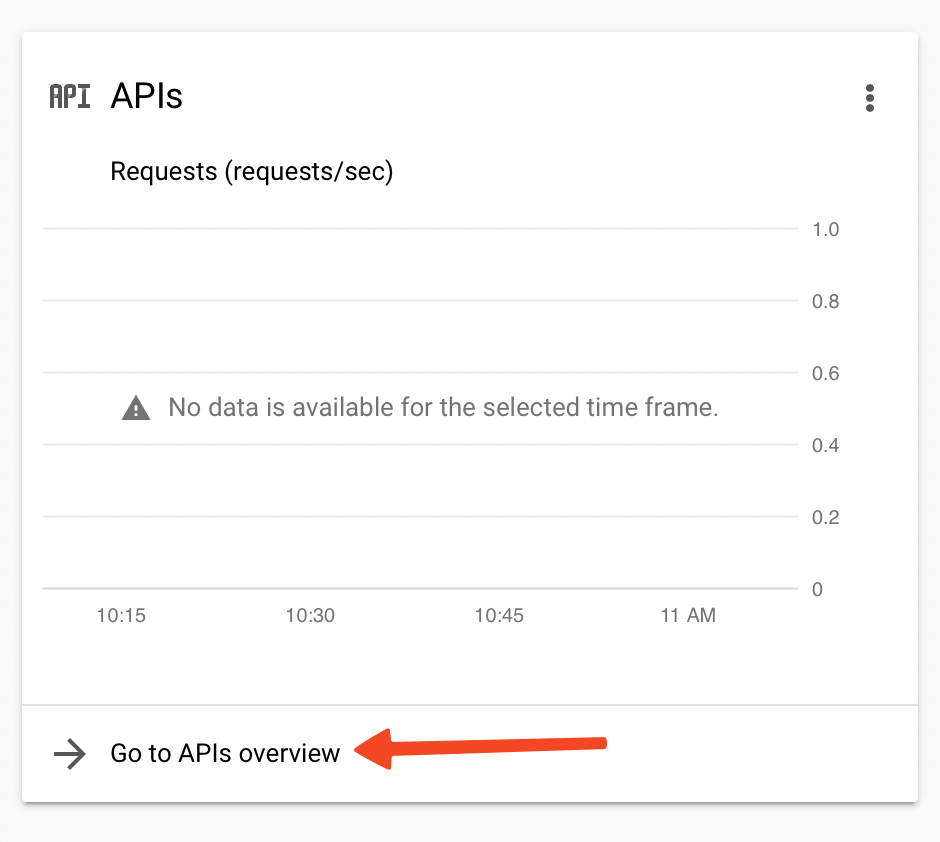

- Click on Go to APIs Overview.

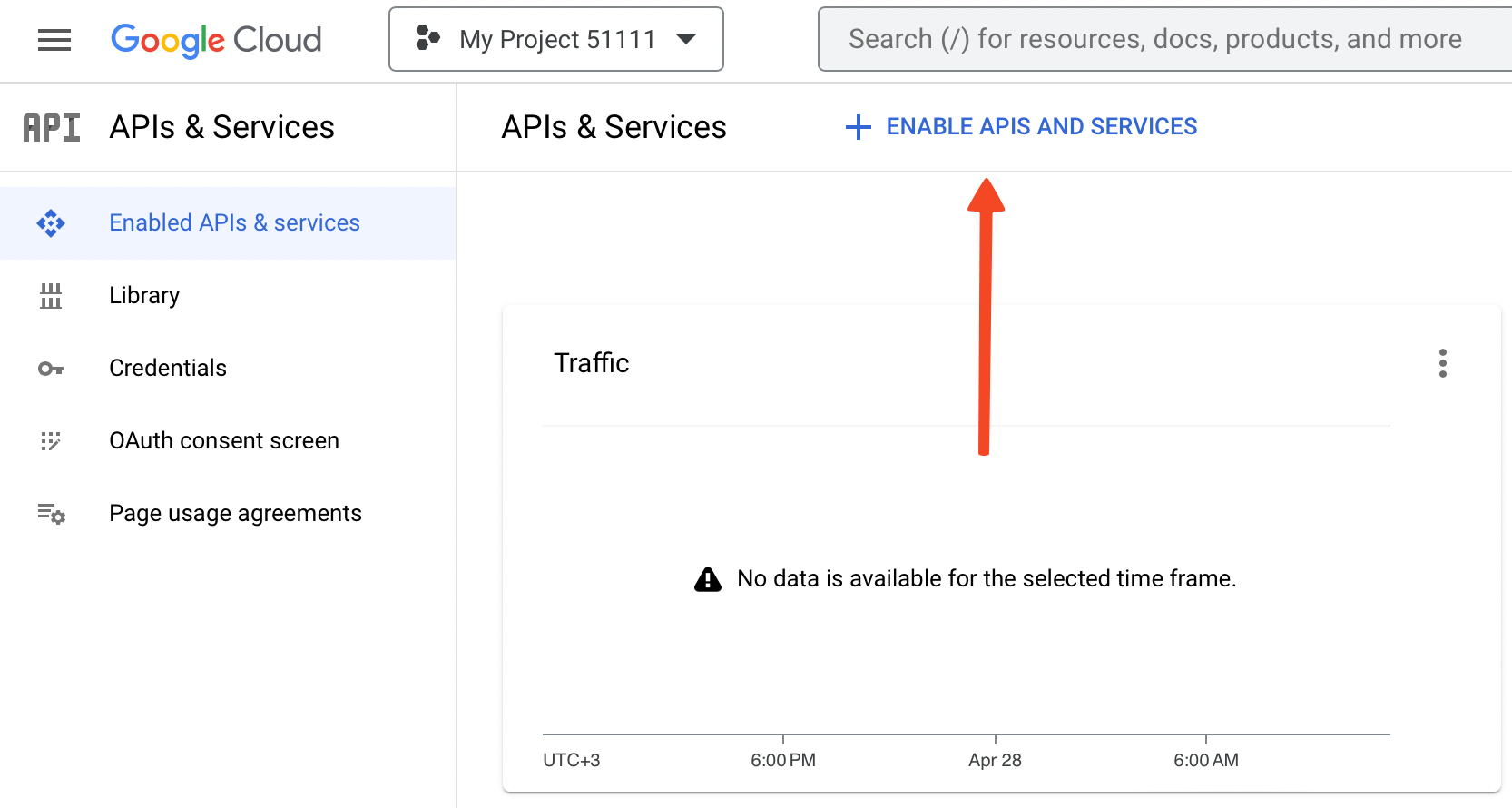

- Click on the Enable APIs and Services button.

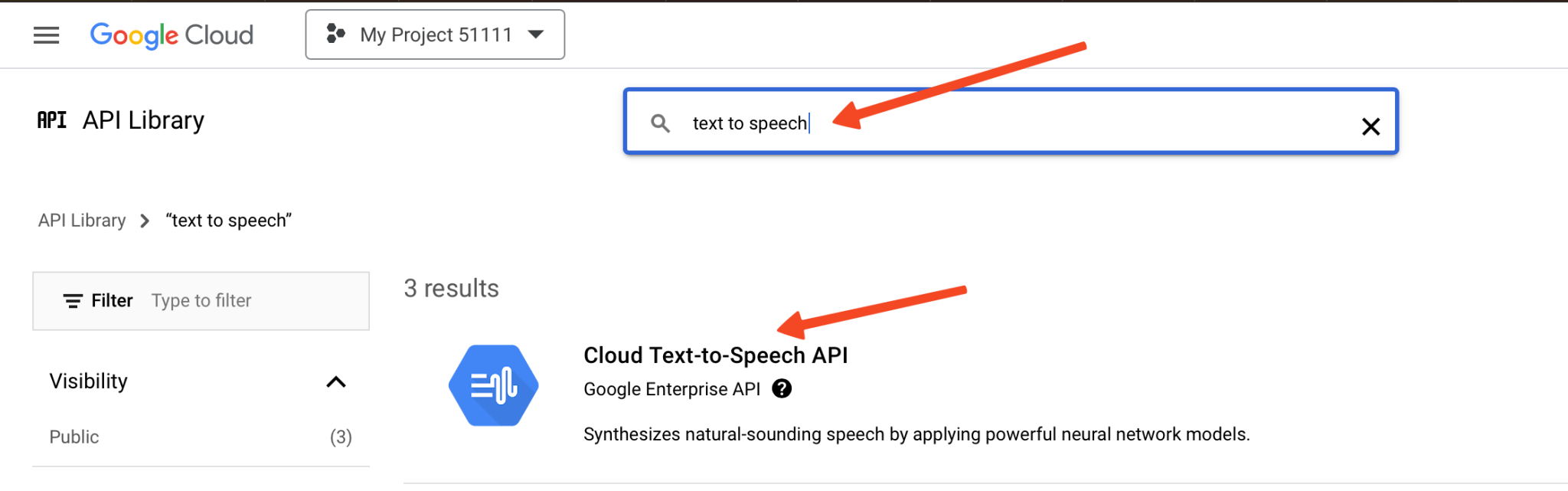

- Search for Text to Speech and select the first option that appears, labelled Cloud Text-to-Speech API.

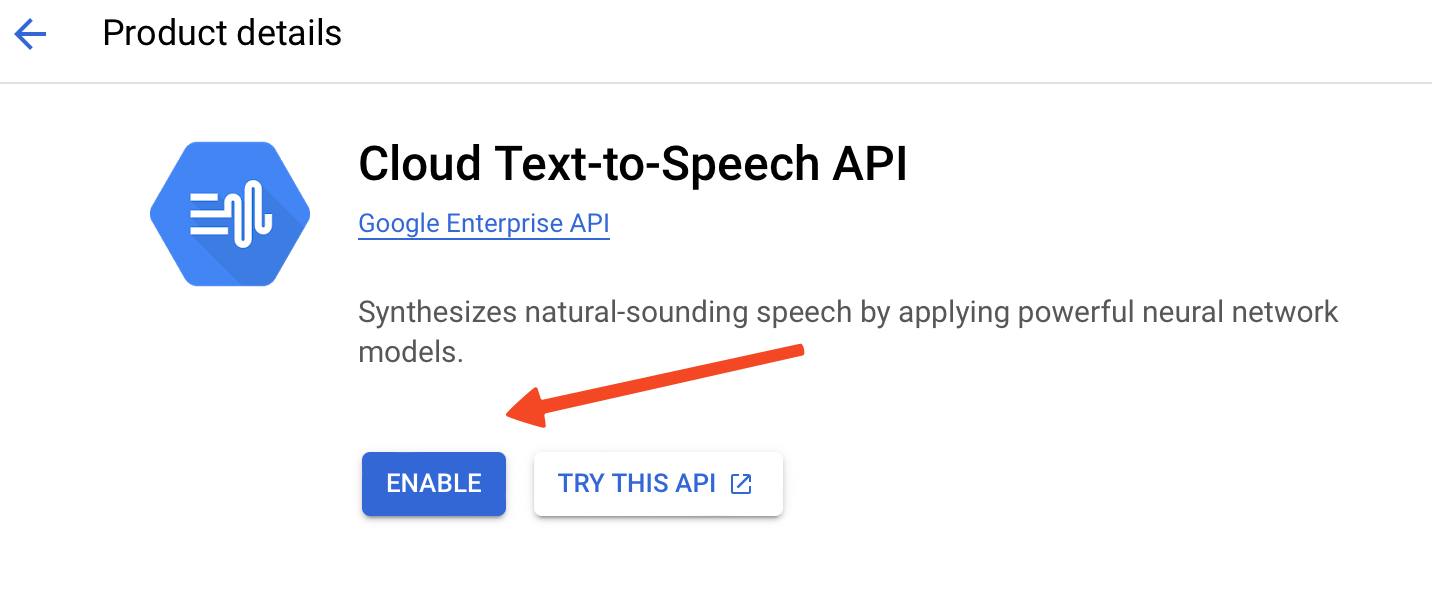

- Click on Enable to enable this API.

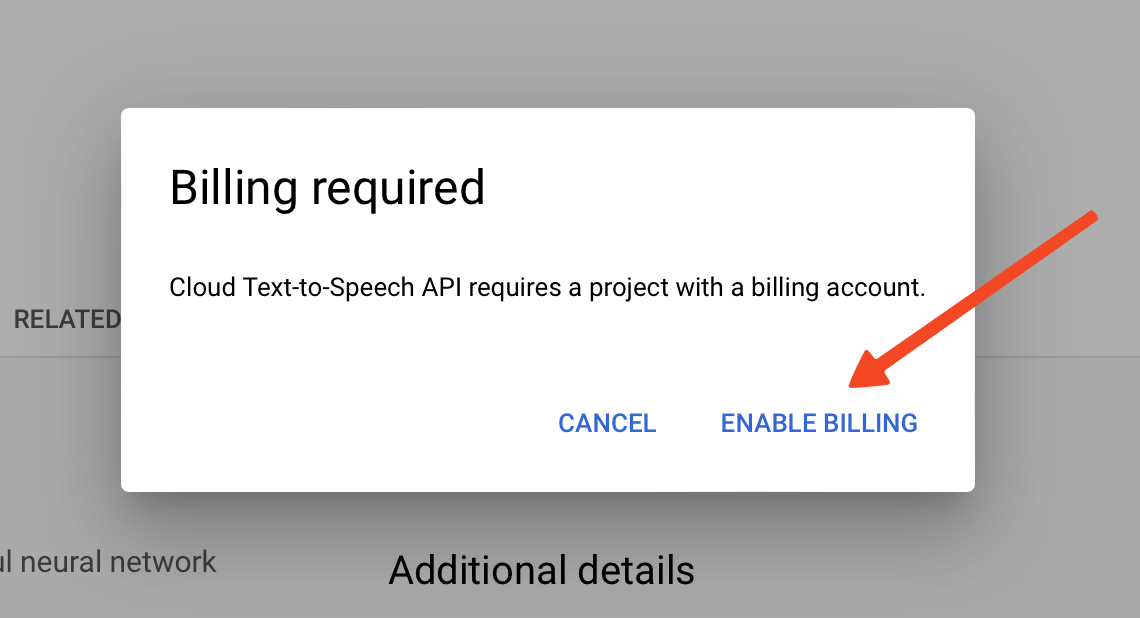

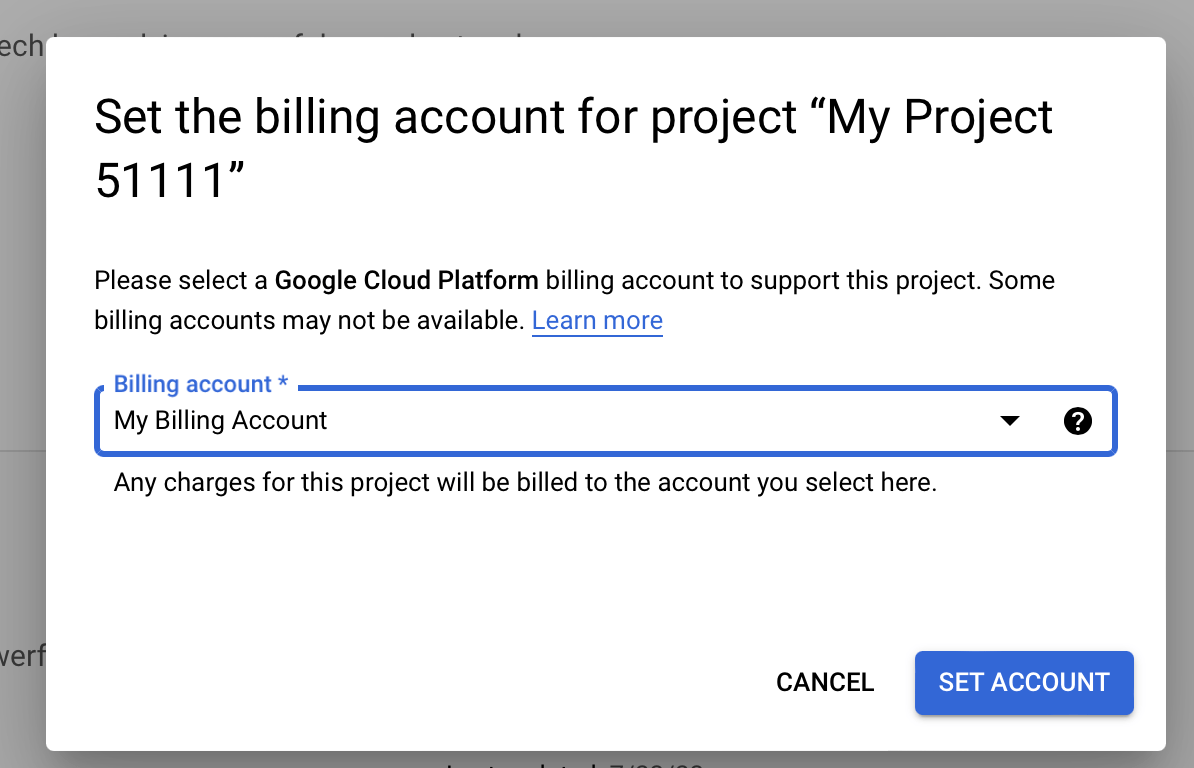

- Please note that you need to activate your Google Cloud Billing Account in order to use this feature. Google's Cloud Billing documentation explains how to create a billing account.

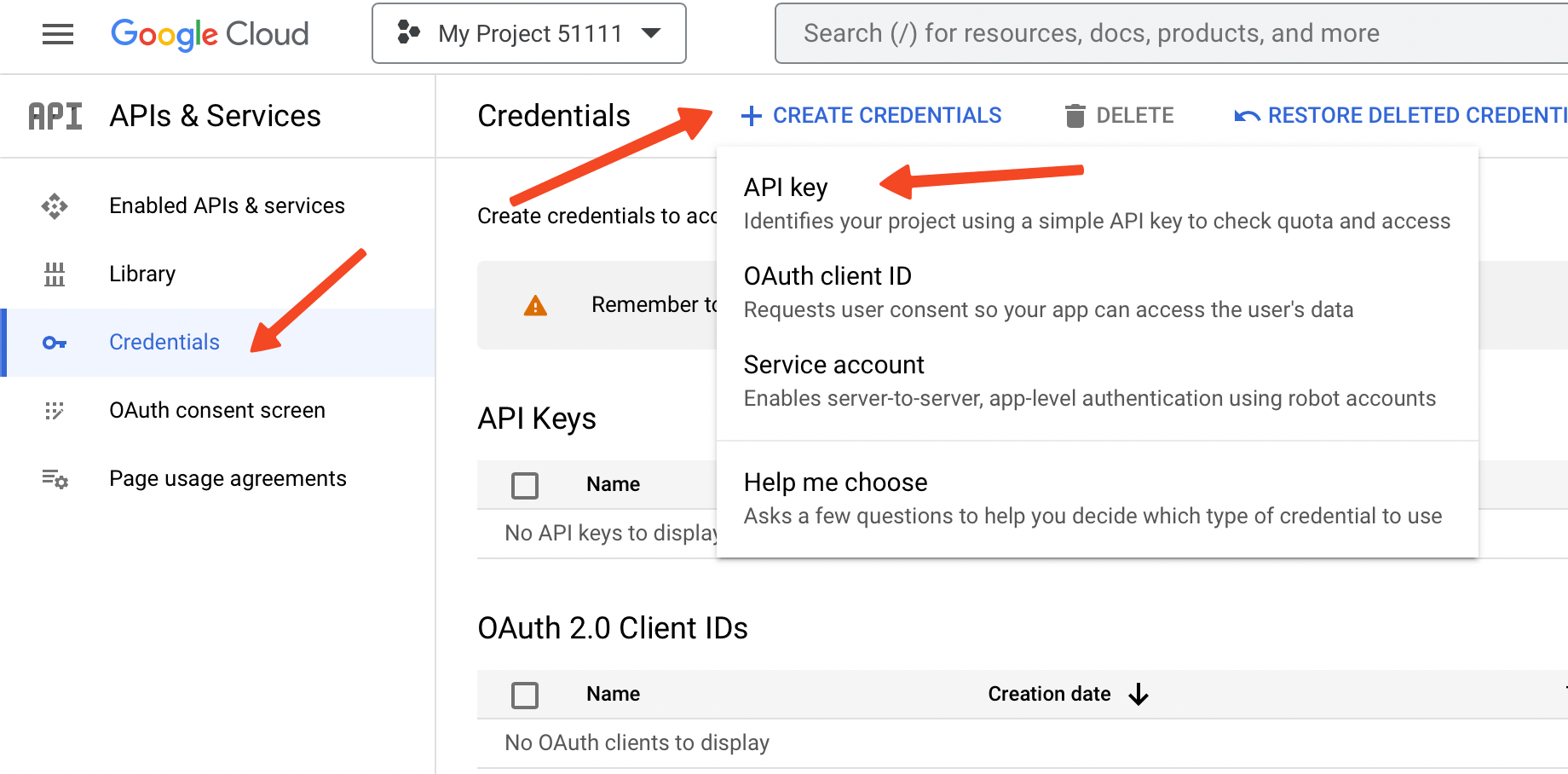

- After successfully setting up your billing account and enabling the API, you’re now ready to create an API key. To accomplish this, navigate to the Credentials page, click on Create Credentials, and then choose API Key.

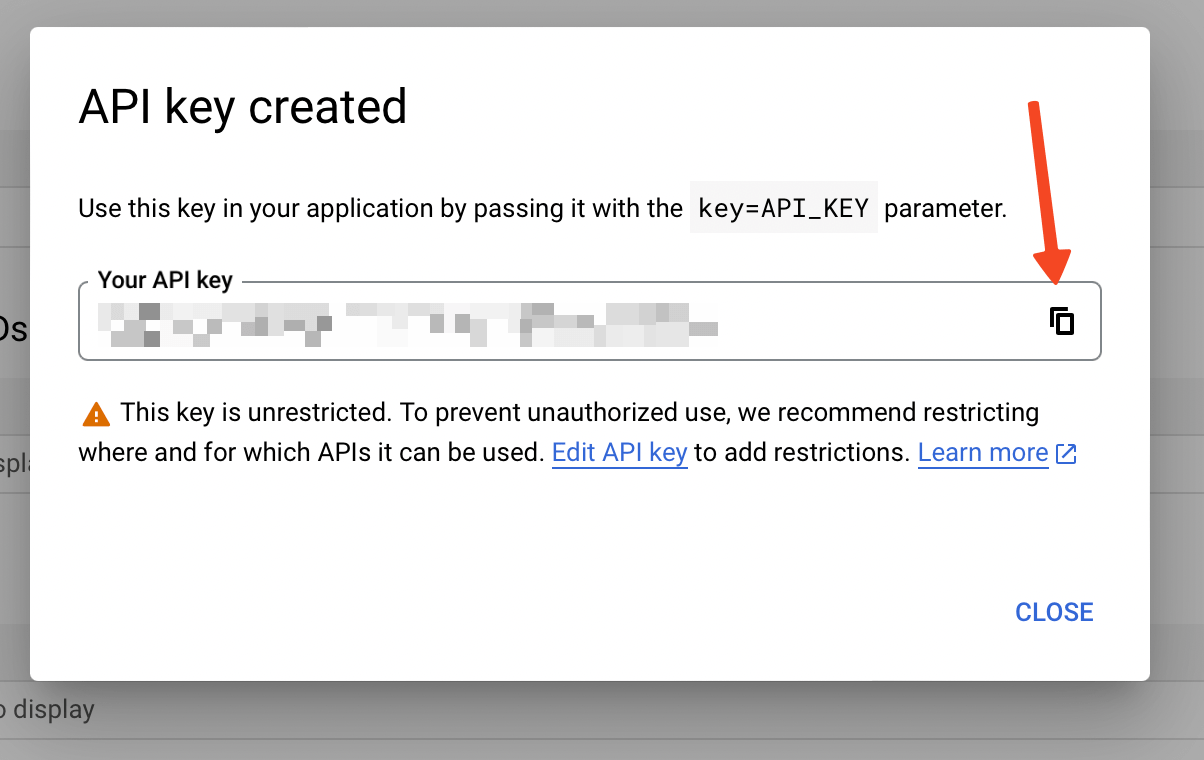

- After your API key has been generated, click on the Copy button to copy it.

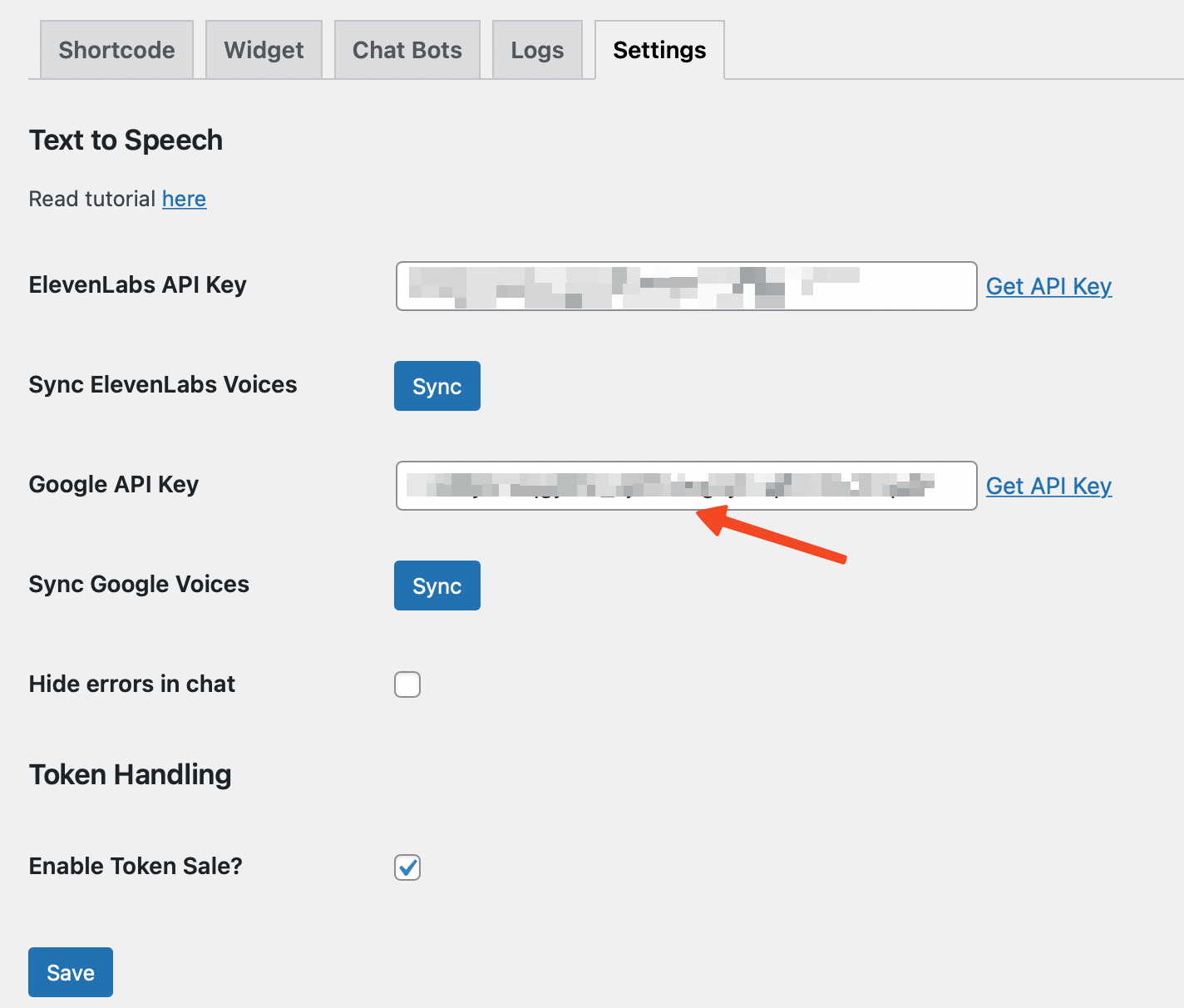

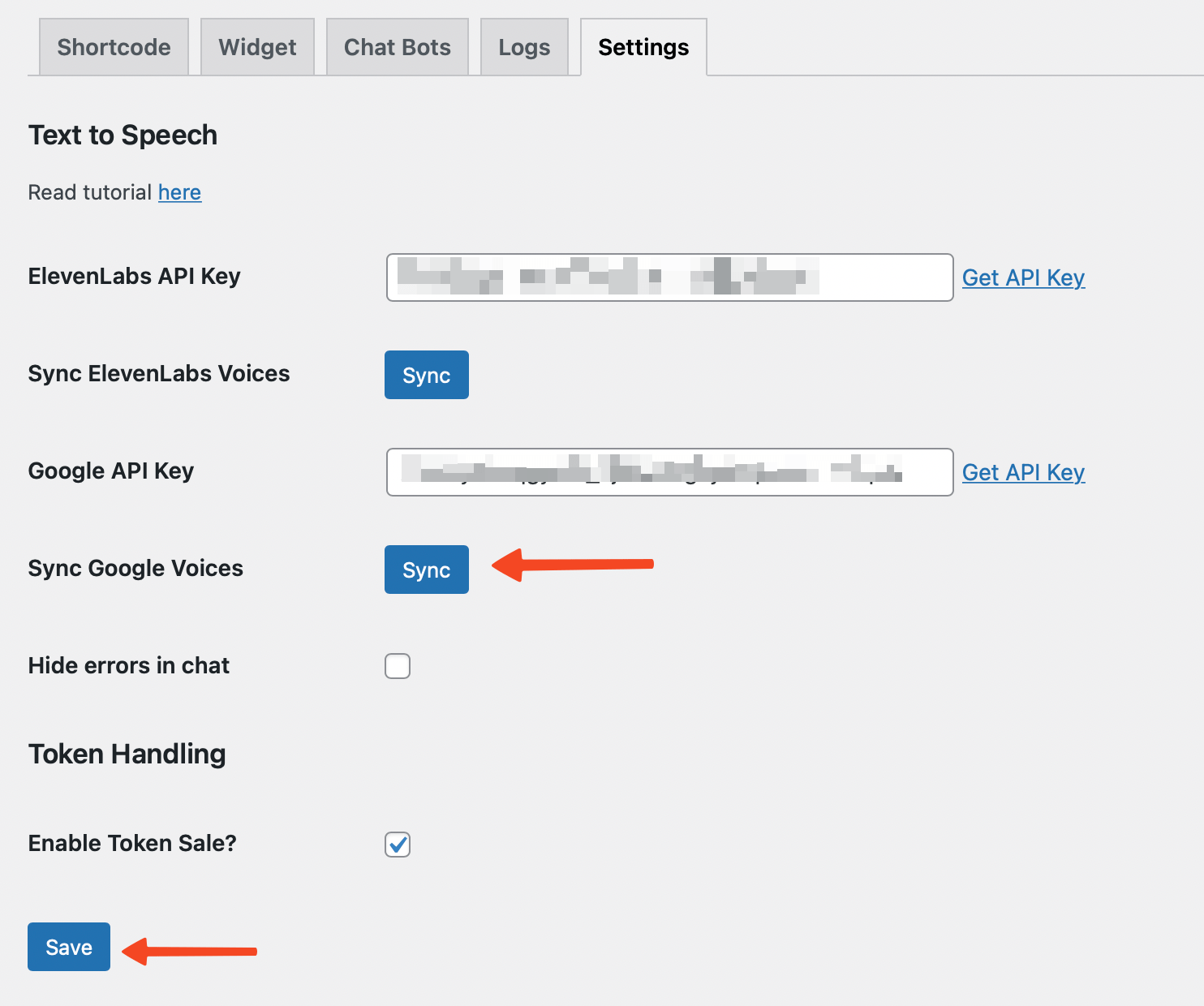

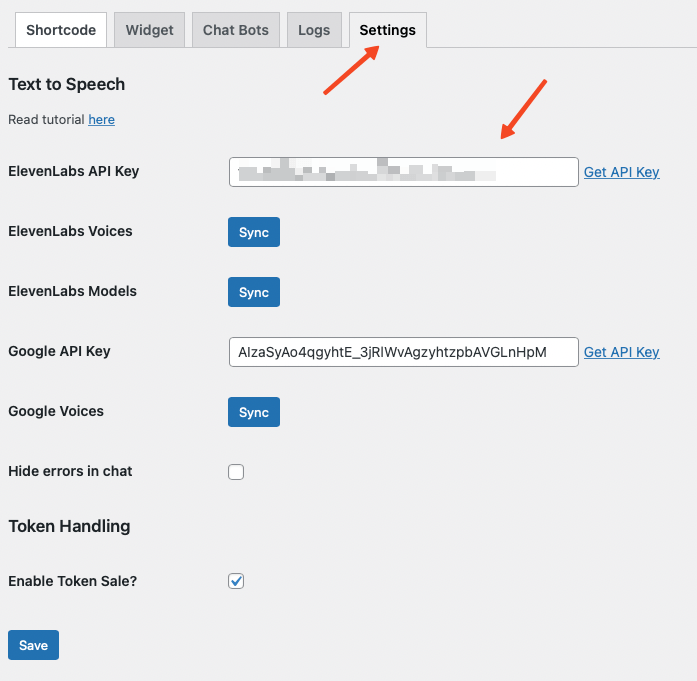

- Once you have your API key, open our plugin’s ChatGPT page. Go to the Settings tab and enter your API key in the provided field, then click Save.

- After saving, a Sync button will appear. Click on it to synchronize the voices with the plugin.

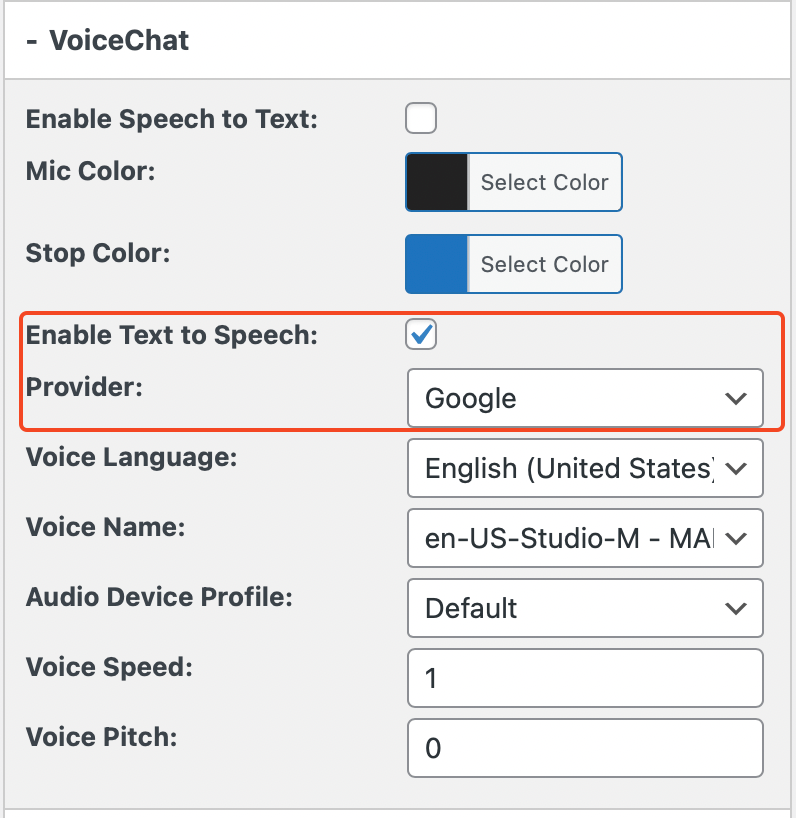

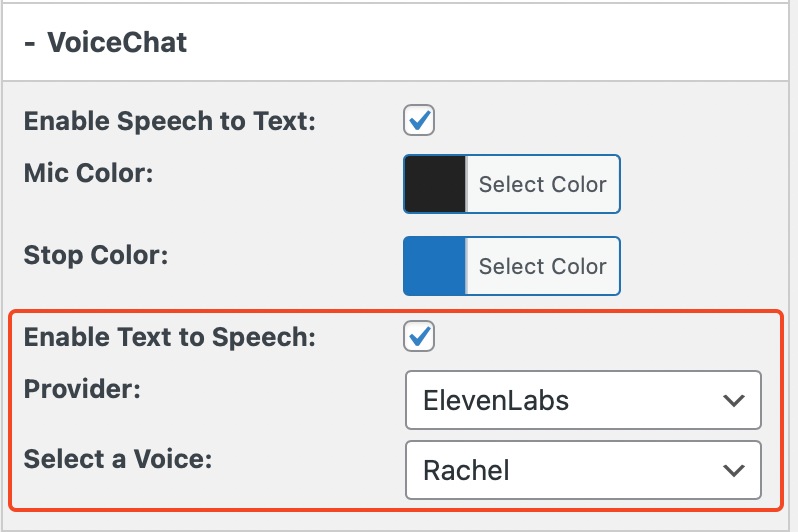

- Now, navigate to your bot’s settings page and locate the VoiceChat tab. Enable the Text to Speech option and select Google from the Provider dropdown menu.

- Upon selecting Google, the following options will appear:

- Voice Language: This option allows you to select the language in which you want the text to be converted to speech. Google supports a wide range of languages.

- Voice Name: This refers to the specific voice model that you want to use for the speech output. Each language usually has multiple voice models (male, female, variations in accent, etc.).

- Audio Device Profile: This option lets you optimize the audio output for specific types of devices, such as headphones, smartphones, or speakers, to ensure the best possible sound quality.

- Voice Speed: This allows you to control the speed (pace) at which the text is read aloud. A higher value will speed up the speech, while a lower value will slow it down.

- Voice Pitch: This option lets you adjust the tonal quality of the voice. A higher value will make the voice sound higher-pitched (like a chipmunk), while a lower value will make it sound deeper.

- Adjust these settings as desired and click Save.

Congratulations, you have successfully integrated Google Text-to-Speech with your Custom ChatGPT!

Your bot is now equipped to speak with a voice of your choosing.
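The settings above correspond closely to fields in Google's Cloud Text-to-Speech REST API. The sketch below shows one plausible mapping, assuming the public `text:synthesize` endpoint and its `voice` and `audioConfig` fields. The helper name, the MP3 encoding, and the example voice and device profile values are illustrative assumptions, not the plugin's actual code.

```python
from typing import Optional

# Endpoint from Google's public REST reference; an API key can be passed
# as a "key" query parameter when sending the request.
SYNTHESIZE_URL = "https://texttospeech.googleapis.com/v1/text:synthesize"


def build_google_tts_body(
    text: str,
    language_code: str,
    voice_name: str,
    device_profile: Optional[str] = None,
    speed: float = 1.0,
    pitch: float = 0.0,
) -> dict:
    """Map the Voice Language, Voice Name, Audio Device Profile,
    Voice Speed, and Voice Pitch settings onto a request body."""
    if speed <= 0:
        raise ValueError("speed must be positive (the API enforces its own range)")
    if not -20.0 <= pitch <= 20.0:
        raise ValueError("pitch must be between -20.0 and 20.0 semitones")

    audio_config = {
        "audioEncoding": "MP3",  # assumed output format for this sketch
        "speakingRate": speed,
        "pitch": pitch,
    }
    if device_profile:
        # For example "headphone-class-device" or "handset-class-device".
        audio_config["effectsProfileId"] = [device_profile]

    return {
        "input": {"text": text},
        "voice": {"languageCode": language_code, "name": voice_name},
        "audioConfig": audio_config,
    }
```

A call such as `build_google_tts_body("Hello", "en-US", "en-US-Wavenet-D", "headphone-class-device", speed=1.1)` would describe an English voice tuned for headphones at a slightly faster pace.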

ElevenLabs

ElevenLabs has an impressive portfolio of voice technology capabilities that include state-of-the-art voice cloning.

Their technology is not only capable of cloning any voice, but also reproducing emotion, making it highly realistic and extremely engaging for user interaction.

One of the standout features of ElevenLabs is the ability to clone your own voice or the voices of famous individuals.

This adds a unique and personalized touch to your chatbot, enabling a more intimate and familiar connection with users.

With ElevenLabs, you can bring your ChatGPT bot to life with dynamic, expressive, and highly realistic voice outputs. Whether it's the familiar voice of a well-known figure or a personalized voice clone, the possibilities are vast, enabling you to tailor your chatbot experience to the precise needs and preferences of your user base.

Here's how you can enable and customize the ElevenLabs integration:

- First, you need to get an API key from ElevenLabs. Visit https://beta.elevenlabs.io/ and register for an account. They offer different plans, including a free plan that provides Long-Form Speech Synthesis, 10,000 characters per month, up to 3 custom voices, and Voice Design for random voices.

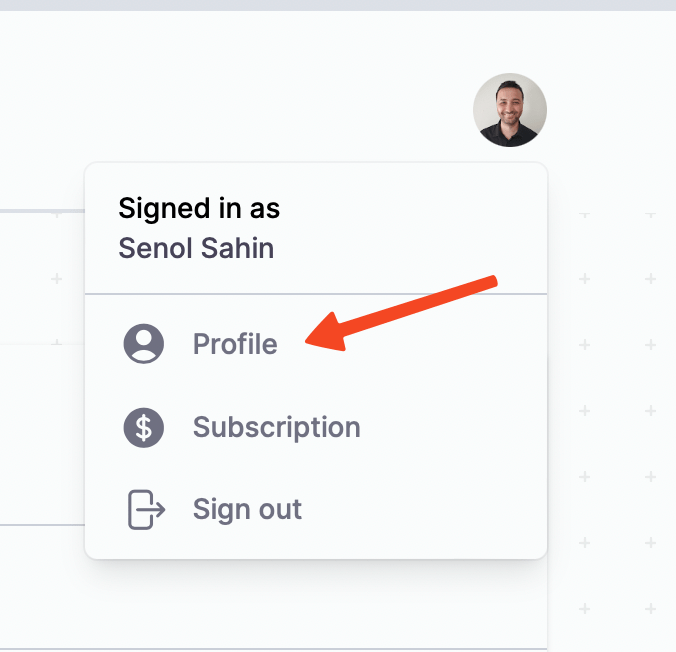

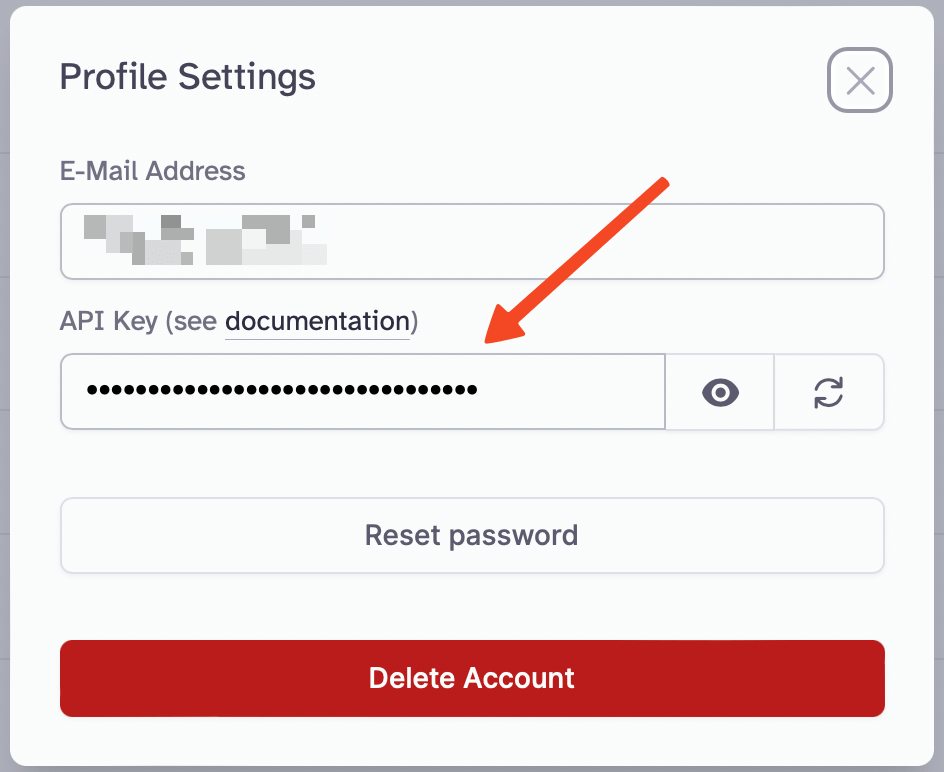

- Once you’ve created your account, click on your profile in the top right corner and select the Profile link. You will find your API key there.

- Copy your API key.

- Head over to our plugin’s ChatGPT – Settings page. Enter your API key in the designated field.

- In the settings page, you’ll find the option Hide API errors in chat. Enabling this will ensure that users won’t see API errors like “out of quota” or “invalid API key.” Instead, they will only see the chat text from OpenAI without audio from ElevenLabs.

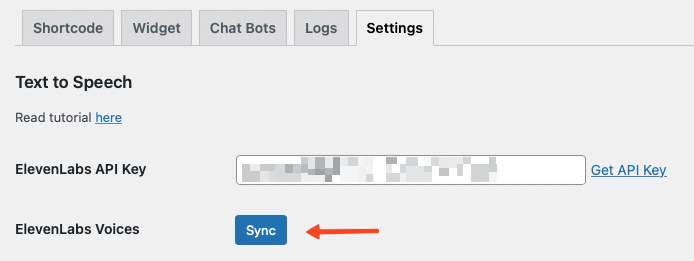

- Save your settings. After saving, click on the Sync button to sync all your custom voices from ElevenLabs. Remember, you can clone any famous person’s voice or even your own!

Now, go to your bot settings page and navigate to the VoiceChat tab. Enable Text to Speech by checking the option, select ElevenLabs from the Provider list, and then select a voice from the dropdown list. Finally, click Save.

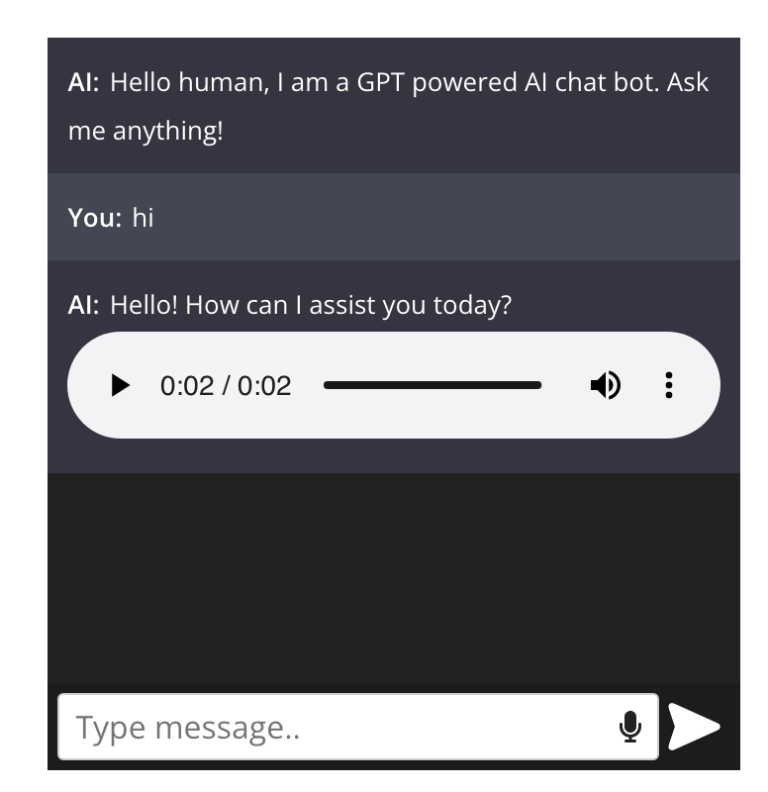

Congratulations! Your bot can now speak.

Go ahead and chat with your bot to experience its new voice capabilities.

The possibilities are endless: you can create a bot that speaks like Steve Jobs and behaves like him by adding special instructions in the Context – Additional Context section and uploading a photo of Steve Jobs.

Voice and Model Synchronization

ElevenLabs offers a powerful and dynamic suite of voices and models for your chat system.

To ensure you're leveraging these features to their fullest potential, it's crucial to keep them synchronized with your plugin settings.

This guide will walk you through syncing voices and models after inputting your ElevenLabs API key.

Syncing Voices

- Saving the API Key: After acquiring your ElevenLabs API key, enter it into the designated field within your plugin settings.

- Click on "Sync Voices": With your API key saved, you'll find a button labeled "Sync Voices". Initiating this will commence the synchronization process, importing all the voices tied to your ElevenLabs account.

- Completion: Post synchronization, all the voices available to you will be seamlessly integrated into your chat system. You can then cherry-pick your desired voice from the dropdown menu.
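Conceptually, syncing voices means fetching the voice list for your account and turning it into dropdown entries. The sketch below assumes the response shape of ElevenLabs' public voices endpoint, a `voices` array whose items carry `voice_id` and `name`. The helper name and the sample IDs are made up for illustration, and the plugin's own sync logic may differ.

```python
import json
from typing import List, Tuple

# Endpoint from ElevenLabs' public API; it is called with an "xi-api-key" header.
VOICES_URL = "https://api.elevenlabs.io/v1/voices"


def voices_to_options(response_text: str) -> List[Tuple[str, str]]:
    """Convert a voices response into (voice_id, name) pairs sorted by name."""
    payload = json.loads(response_text)
    options = [(voice["voice_id"], voice["name"]) for voice in payload.get("voices", [])]
    return sorted(options, key=lambda option: option[1].lower())


# Hypothetical sample response; real voice IDs are opaque strings.
sample = json.dumps(
    {
        "voices": [
            {"voice_id": "id-002", "name": "My Cloned Voice"},
            {"voice_id": "id-001", "name": "Bella"},
        ]
    }
)
options = voices_to_options(sample)
```

Sorting by name is a design choice for this sketch so that the dropdown stays predictable after each sync.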

Voice Settings

Upon successfully synchronizing the voices from ElevenLabs, the plugin automatically pulls in the following voice settings:

- Stability: Determines the consistency of the voice output. A higher stability setting ensures the voice remains steady and consistent, avoiding unexpected fluctuations or changes in tone.

- Similarity Boost: This setting emphasizes the resemblance of the generated voice to a particular target voice. Elevating the similarity boost will make the synthesized voice sound more like the target.

- Speaker Boost: This enhances the distinctive characteristics of a chosen speaker's voice. By increasing the speaker boost, the synthesized voice will embody more of the unique nuances and idiosyncrasies of the target speaker.

- Style: Reflects the tone and manner of the generated voice. Whether you want a voice that's cheerful, serious, or neutral, adjusting the style allows you to fine-tune the emotion and demeanor of the voice output.

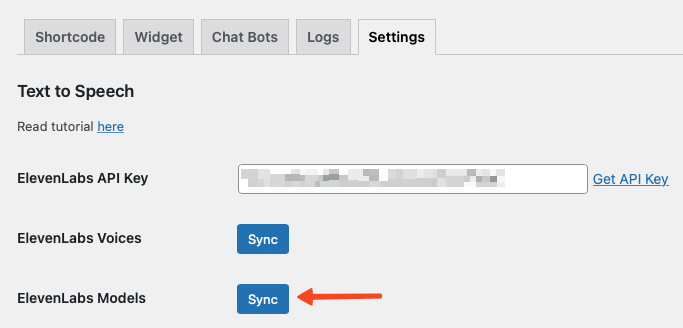
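As a sketch of how these voice settings might travel with a synthesis request, the example below assembles a request for ElevenLabs' public text-to-speech endpoint. It assumes the API's `voice_settings` fields (`stability`, `similarity_boost`, `style`, `use_speaker_boost`), where the first three are values between 0 and 1 and speaker boost is an on/off flag. The helper name and default values are illustrative only.

```python
def build_elevenlabs_request(
    voice_id: str,
    api_key: str,
    text: str,
    model_id: str = "eleven_multilingual_v1",
    stability: float = 0.5,
    similarity_boost: float = 0.75,
    style: float = 0.0,
    use_speaker_boost: bool = True,
) -> dict:
    """Return the URL, headers, and JSON body for a text-to-speech call."""
    sliders = {
        "stability": stability,
        "similarity_boost": similarity_boost,
        "style": style,
    }
    for name, value in sliders.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0.0 and 1.0")

    return {
        "url": f"https://api.elevenlabs.io/v1/text-to-speech/{voice_id}",
        "headers": {"xi-api-key": api_key, "Content-Type": "application/json"},
        "json": {
            "text": text,
            "model_id": model_id,
            "voice_settings": {**sliders, "use_speaker_boost": use_speaker_boost},
        },
    }
```

The returned dictionary can be passed to any HTTP client; keeping the request construction separate from the network call makes the settings easy to inspect and test.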

Syncing Models

- Saving the API Key: If not done already, ensure your ElevenLabs API key is stored.

- Click on "Sync Models": Adjacent to the "Sync Voices" button, you'll spot another labeled "Sync Models". Activating this will fetch all models associated with your ElevenLabs account.

- Completion: Post synchronization, you're set to deploy these models within your chat infrastructure.

Understanding Models

At present, two primary models are at your disposal:

- Eleven English v1: Standard English language model to generate speech in a variety of voices, styles and moods.

- Eleven Multilingual v1: Generate lifelike speech in multiple languages and create content that resonates with a broader audience. Languages: English (USA), English (UK), English (Australia), English (Canada), German, Polish, Spanish (Spain), Spanish (Mexico), Italian, French (France), French (Canada), Portuguese (Portugal), Portuguese (Brazil) and Hindi.

Limitations

While the new ElevenLabs voice integration with ChatGPT brings exciting possibilities for chatbot creators, it’s important to be aware of its limitations.

Understanding these restrictions will help you make informed decisions when using this feature.

- Multilingual v1 Limitations: Some numbers and symbols may currently be pronounced incorrectly. The generated speech can be unstable if the text exceeds 1000 characters.

- API Errors: If you choose to hide API errors in chat, users won’t see errors like “out of quota” or “invalid API key.” Instead, they will only see the chat text from OpenAI without any audio from ElevenLabs. This could lead to confusion if users expect to hear a voice response.

- Quota Restrictions: Depending on the ElevenLabs plan you choose, you may face limitations in terms of characters per month, custom voices, and commercial licensing. The free plan, for example, offers 10,000 characters per month and up to 3 custom voices without a commercial license.
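One possible way to work around the 1000-character instability noted above is to split long replies into smaller pieces before sending them for synthesis. The sketch below is a generic approach, not something the plugin is documented to do: it breaks text at sentence boundaries where it can and hard-splits any single sentence that is still too long. The function name and the sentence-splitting rule are assumptions.

```python
import re
from typing import List


def split_for_tts(text: str, max_chars: int = 1000) -> List[str]:
    """Split text into chunks of at most max_chars, preferring sentence ends."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: List[str] = []
    current = ""
    for sentence in sentences:
        # A single sentence longer than the limit is cut into fixed-size pieces.
        while len(sentence) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:max_chars])
            sentence = sentence[max_chars:]
        if not sentence:
            continue
        candidate = f"{current} {sentence}" if current else sentence
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks


def characters_used(chunks: List[str]) -> int:
    """Total characters that would count against a monthly character quota."""
    return sum(len(chunk) for chunk in chunks)
```

Summing the chunk lengths also gives a rough estimate of how much of a monthly character allowance a reply would consume.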

By being aware of these limitations, you can make the most of the ElevenLabs voice integration with ChatGPT and create unique, engaging experiences for your chatbot users.
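The trade-off behind the Hide API errors in chat option can be illustrated with a small fallback routine. This is a hedged sketch of the described behavior, not the plugin's code: `build_reply` and the `synthesize` callable are hypothetical names, and any exception raised by the voice provider is treated as an API error.

```python
from typing import Callable


def build_reply(
    chat_text: str,
    synthesize: Callable[[str], bytes],
    hide_api_errors: bool,
) -> dict:
    """Return the chat text with audio when possible.

    If synthesis fails, the text is still returned; the error message is
    included only when hide_api_errors is False.
    """
    try:
        audio = synthesize(chat_text)
        return {"text": chat_text, "audio": audio, "error": None}
    except Exception as exc:  # e.g. out of quota or invalid API key
        error = None if hide_api_errors else str(exc)
        return {"text": chat_text, "audio": None, "error": error}


def failing_synthesizer(text: str) -> bytes:
    """Stand-in for a provider call that fails (hypothetical)."""
    raise RuntimeError("out of quota")


hidden = build_reply("Hello", failing_synthesizer, hide_api_errors=True)
shown = build_reply("Hello", failing_synthesizer, hide_api_errors=False)
```

In the hidden case the user receives only the text reply, which is why a silent bot can be confusing if users expect to hear a voice.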